Software testing is vital in software development and maintenance. With a proper testing strategy, you can ensure the quality and reliability of your product. Quality is not only the QA team’s responsibility; developers also need to write unit tests to verify that the code they ship works as required. Everything seems fine until your tests start failing and you start debugging them to identify the root cause. Unfortunately, not all tests give consistent results: some fail without any code change. These are known as flaky tests. Flaky tests can occur in codebases of any size, and tech giants such as Facebook and Google have reportedly been plagued by them. They cause problems for development teams because they undermine the reliability of the test suite. This article explores practical strategies for identifying and reducing flaky tests to improve your testing process.

What are flaky tests?

Tests are typically deterministic, meaning they always pass or always fail for the same code. Some tests, however, are non-deterministic; these are known as flaky tests. A test is flaky if it behaves differently with the same code in the same environment. Flaky tests are like hidden landmines that can detonate unexpectedly and disrupt your testing process. They are problematic because their inconsistent results undermine the reliability of the testing process.

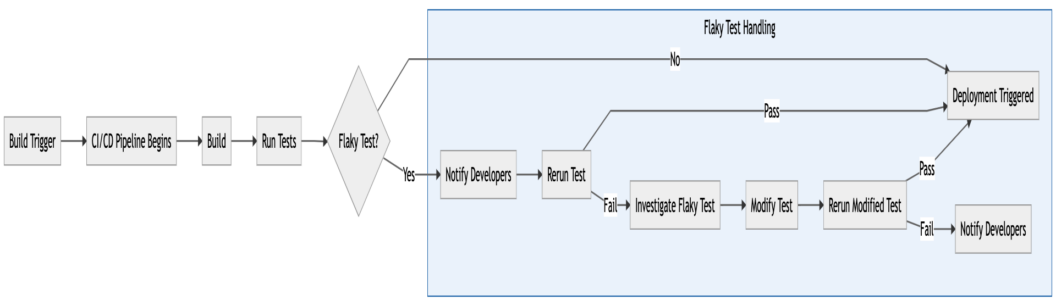

A typical CI/CD flow when a flaky test is detected in the nightly build pipeline looks like this:

- The CI pipeline is triggered at a specific time.

- Code is compiled and built.

- CI runs the tests, and developers are notified if there is a failure.

- If there are no failures, the code is deployed to the target environment.

- If a test fails, it is rerun to verify the result.

- If the rerun passes without any code change, the test is likely flaky; developers then investigate the underlying cause and refactor the code to fix the flaky test.

- Rerun the modified test to verify that flakiness is resolved.

- The code is deployed to the target environment if the test passes.

Best Practices to Fix Flaky Tests

While no silver bullets exist, you can use the following best practices to reduce the number of flaky tests in your development process.

Regular Test Maintenance

It is good practice to schedule routine reviews of the test suite to eliminate redundant or unnecessary tests, some of which may themselves be flaky. Regular maintenance addresses flaky tests proactively and keeps the test suite healthy and reliable. By periodically examining test results, recognizing issues, and handling them promptly, you keep tests up to date without compromising their quality.

Ignore it

Ignoring or disabling a flaky test is tempting, but think it through before you do. Ideally, you should not ignore or delete flaky tests: they fail only occasionally rather than on every run, and each one still covers real functionality that works whenever the CI pipeline is green. In practice, teams usually reach for this option when a flaky test is blocking the release pipeline. In Java, if you use JUnit 5, you can put the @Disabled annotation on a test you believe is flaky to skip it. Most test frameworks in other languages offer something similar. Treat this as a short-term measure only: eventually, you must deal with the flaky test.

Isolation and Independence

To keep results accurate, your tests should be independent and isolated from external dependencies. Otherwise, a test can fail simply because something is wrong with a dependency, even though the code it checks works correctly. One popular pattern for achieving isolation and independence is the hermetic test pattern.

According to this pattern, each test should be self-sufficient and should avoid depending on another test or on any third-party API that is not under your control.

In traditional shared testing environments, fixing flakiness is challenging because external dependencies can be unavailable or unreliable (e.g., network issues). A hermetic testing environment, by contrast, is consistent, isolated, and deterministic, so it generates predictable test results. Think of it like a container running everything required for your tests.
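To make the hermetic idea concrete, here is a minimal sketch in plain Java. All names in it (ExchangeRateClient, PriceConverter, FakeExchangeRateClient) are hypothetical and exist only for illustration: the code under test depends on an interface, and the test supplies an in-memory fake instead of calling a real service.

```java
import java.util.Map;

final class HermeticTestSketch {
    public static void main(String[] args) {
        // The fake carries fixed data, so the check never touches the network.
        PriceConverter converter =
                new PriceConverter(new FakeExchangeRateClient(Map.of("EUR", 0.5)));
        double result = converter.toCurrency(10.0, "EUR");
        if (result != 5.0) {
            throw new AssertionError("expected 5.0 but got " + result);
        }
        System.out.println("hermetic check passed");
    }
}

// Hypothetical dependency; in production it would be backed by a remote API.
interface ExchangeRateClient {
    double rateFor(String currency);
}

// Hypothetical code under test; it only knows the interface.
final class PriceConverter {
    private final ExchangeRateClient client;

    PriceConverter(ExchangeRateClient client) {
        this.client = client;
    }

    double toCurrency(double amountUsd, String currency) {
        return amountUsd * client.rateFor(currency);
    }
}

// In-memory stand-in used only by tests: fixed data, no I/O, no shared state.
final class FakeExchangeRateClient implements ExchangeRateClient {
    private final Map<String, Double> rates;

    FakeExchangeRateClient(Map<String, Double> rates) {
        this.rates = rates;
    }

    @Override
    public double rateFor(String currency) {
        Double rate = rates.get(currency);
        if (rate == null) {
            throw new IllegalArgumentException("Unknown currency: " + currency);
        }
        return rate;
    }
}
```

Because each test builds its own fake, the result depends neither on network conditions nor on what other tests did earlier.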

Timeout Strategies

Microservices have made end-to-end testing complex because your service now has numerous dependencies. To test such a complex setup, you need to rely on timeouts because your tests may hang indefinitely if you don’t get a response in time. You must configure a timeout strategy in your test to save resources, time, and effort.

This approach has its own problems. Suppose a call to an external system is slow: once the wait exceeds the configured timeout, the test fails even though the code under test is correct, and the test is flagged as flaky. To fix this, you can mock the external API calls where you have observed slow responses or where the external system itself is frequently unavailable.
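The sketch below illustrates both halves of that trade-off using only the Java standard library. The helper callWithTimeout, the exception CallTimedOutException, and the two suppliers are hypothetical names chosen for this example; the timeout values are arbitrary assumptions.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

final class TimeoutSketch {

    public static void main(String[] args) {
        // Hypothetical slow dependency: answers only after two seconds.
        Supplier<String> slowService = () -> {
            pause(2000);
            return "real response";
        };
        // Stub that stands in for the slow dependency during tests.
        Supplier<String> stub = () -> "stubbed response";

        try {
            callWithTimeout(slowService, Duration.ofMillis(200));
        } catch (CallTimedOutException e) {
            System.out.println("slow dependency: " + e.getMessage());
        }
        System.out.println("stub: " + callWithTimeout(stub, Duration.ofMillis(200)));
    }

    // Runs the call on another thread and stops waiting once the timeout elapses.
    static String callWithTimeout(Supplier<String> call, Duration timeout) {
        CompletableFuture<String> future = CompletableFuture.supplyAsync(call);
        try {
            return future.get(timeout.toMillis(), TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            throw new CallTimedOutException("no response within " + timeout.toMillis() + " ms");
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("call failed", e.getCause());
        }
    }

    static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

// Unchecked exception that signals an exceeded timeout.
final class CallTimedOutException extends RuntimeException {
    CallTimedOutException(String message) {
        super(message);
    }
}
```

The timeout keeps the test from hanging, while the stub removes the source of the delay, so the test result no longer depends on how quickly the external system responds.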

Randomized Test Order

Randomizing the test order is another way to uncover hidden dependencies between tests and flakiness tied to execution order. In JUnit 5, for example, annotating a test class with @TestMethodOrder(MethodOrderer.Random.class) runs its methods in a pseudo-random order. Let us understand this with an example.

In the following code, the result of testC depends on whether testB has already run. If testC runs after testB, it passes. If the execution order is randomized and testC runs before testB, it fails.

```java
import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.assertEquals;

public class RandomOrderTest {

    // Static field: shared by every test method in this class
    private static int num = 0;

    @Test
    public void testA() {
        assertEquals(1, num + 1); // Also order-dependent: fails if testB has already run
    }

    @Test
    public void testB() {
        num = 10;
        assertEquals(12, num + 2);
    }

    @Test
    public void testC() {
        assertEquals(13, num + 3); // Passes if testB runs before testC, otherwise fails
    }
}
```

To fix these flaky tests, stop sharing state between them. If a complex test setup forces you to share state, at least reset it after each run. Ideally, each test should set up and tear down its own environment independently. In JUnit 5, you can use the @BeforeEach annotation to set the initial state before each test method runs.

For example, you can fix the flakiness in the previous code sample by resetting the shared state before each test method is executed. Since we are resetting the state of the shared variable before each test, we need to slightly adjust testC to ensure it is independent of the test execution order of other tests.

```java
// Inside RandomOrderTest (requires: import org.junit.jupiter.api.BeforeEach;)
@BeforeEach
public void setUp() {
    num = 0; // Reset shared state before each test
}

@Test
public void testC() {
    int actualResult = num + 13;
    assertEquals(13, actualResult);
}
```

Continuous Monitoring

Most modern CI tools provide enough metrics to discover patterns in test failures. Some CI tools also allow you to rerun your test automatically using a cron scheduler. Scheduling allows you to run your builds at different intervals to gain more insights and identify failure patterns and root causes. These insights can help in fixing your tests. If your CI allows it, you can enable alerts for sudden increases in test flakiness.
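As an illustration of the kind of pattern such metrics can reveal, the following sketch flags a test as flaky when the same commit has produced both a pass and a fail. It is a simplified assumption about how detection could work, not a description of any particular CI tool; the TestRun type, its fields, and the sample data are hypothetical.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class FlakyTestReport {

    public static void main(String[] args) {
        List<TestRun> history = List.of(
                new TestRun("checkoutTest", "abc123", true),
                new TestRun("checkoutTest", "abc123", false), // same commit, different result
                new TestRun("loginTest", "abc123", false),
                new TestRun("loginTest", "def456", true));    // result changed along with the code
        System.out.println(findFlakyTests(history)); // prints [checkoutTest]
    }

    // One recorded test execution (hypothetical shape of exported CI data).
    static final class TestRun {
        final String testName;
        final String commit;
        final boolean passed;

        TestRun(String testName, String commit, boolean passed) {
            this.testName = testName;
            this.commit = commit;
            this.passed = passed;
        }
    }

    // Returns the names of tests that both passed and failed on the same commit.
    static Set<String> findFlakyTests(List<TestRun> history) {
        Map<String, Boolean> firstOutcome = new HashMap<>(); // key: test name + commit
        Set<String> flaky = new TreeSet<>();
        for (TestRun run : history) {
            String key = run.testName + "@" + run.commit;
            Boolean previous = firstOutcome.putIfAbsent(key, run.passed);
            if (previous != null && previous != run.passed) {
                flaky.add(run.testName);
            }
        }
        return flaky;
    }
}
```

Here loginTest is not reported, because its result changed only after the code changed, which points to a genuine fix or regression rather than flakiness.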

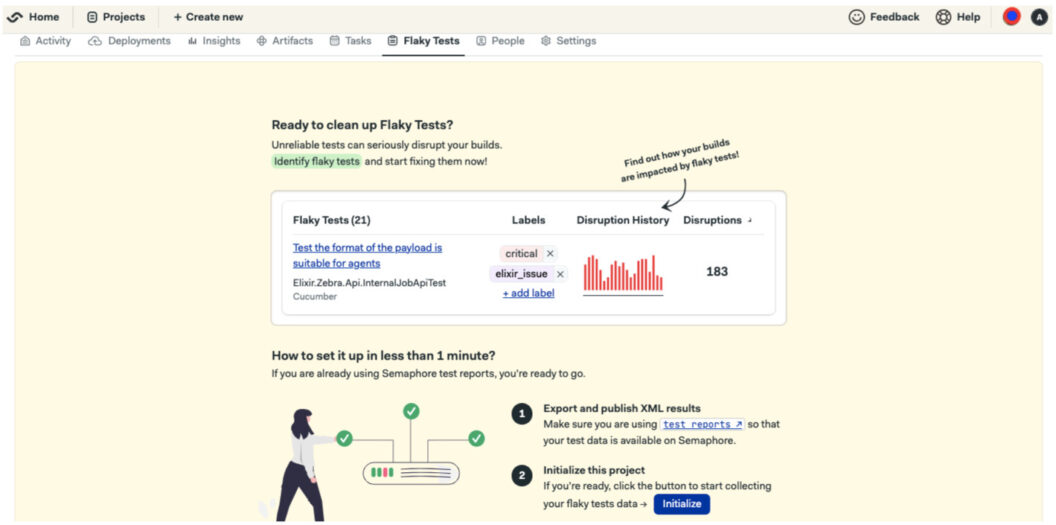

On the CI side, Semaphore offers flaky test detection to help you manage unreliable tests across your entire test suite.

To initialize flaky test detection, find your project in Semaphore and:

- Select the Flaky tests tab.

- Click Initialize.

Semaphore recently announced the open beta of their Flaky Tests Dashboard. It is a specialized tool engineered to combat the unpredictability of flaky tests in your CI/CD pipeline.

Environment Consistency

When dealing with flakiness in your tests, you should ensure consistency across test environments. By using containerization or virtualization, you can replicate an environment accurately and resolve environment-specific issues promptly. One option is Testcontainers, a testing library that helps you deal with environmental disparity and gain confidence in your tests.

Modern software development keeps growing more complex, and a typical tech stack combines many tools and technologies: at a minimum, a message queue, a reverse proxy, and multiple databases. For testing, instead of relying on mock services or in-memory implementations of these tools, you can use Testcontainers, which spins up a Docker container for each dependency and gives you consistent results.

Configure Automatic Retries

A common way to cope with a flaky test is to retry it automatically. This is not a real fix: rerunning a test until it passes means you are just trying your luck, and it hides the underlying problem. The benefit is that you don’t have to identify and rerun failed tests by hand. To rerun only the tests that failed, you can rely on build-tool support such as the rerunFailingTestsCount parameter of Maven Surefire. JUnit 5 also provides the @RepeatedTest annotation, which runs a test a fixed number of times regardless of the outcome. It does not retry on failure, but it is useful for reproducing a flaky test and for confirming that a fix holds.
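To make the retry idea concrete, here is a minimal plain-Java sketch that is independent of any test framework. The helper runWithRetries and the simulated flaky check are hypothetical; real projects would normally use the retry support of their build tool or test framework instead.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class RetrySketch {

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Simulated flaky check: fails on the first two calls, passes on the third.
        Runnable flakyCheck = () -> {
            if (calls.incrementAndGet() < 3) {
                throw new AssertionError("transient failure");
            }
        };
        int attempt = runWithRetries(flakyCheck, 3);
        System.out.println("passed on attempt " + attempt); // prints: passed on attempt 3
    }

    // Runs the check up to maxAttempts times and returns the attempt that passed.
    // If every attempt fails, the last failure is rethrown.
    static int runWithRetries(Runnable check, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        AssertionError lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                check.run();
                return attempt;
            } catch (AssertionError e) {
                lastFailure = e; // remember the failure and try again
            }
        }
        throw lastFailure;
    }
}
```

Returning the attempt number is a deliberate choice: a test that passes only on a later attempt is still flaky, and recording that number keeps the problem visible instead of silently hiding it. The @RepeatedTest annotation mentioned above is used as follows.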

```java
import org.junit.jupiter.api.RepeatedTest;

public class MyRepeatedTest {

    @RepeatedTest(3) // Run the test method 3 times
    void repeatedTest() {
        // Add your logic
    }
}
```

Conclusion

Flaky tests pose a significant challenge for software development teams: they undermine the reliability of the testing process and slow down development and release cycles. Nevertheless, by applying best practices such as regular test maintenance, keeping tests isolated and independent, and using sensible timeout strategies, teams can lower the number of flaky tests and keep their test suite reliable. Investing in an effective testing strategy helps teams deliver high-quality software that meets user requirements and expectations.