Commercial AI and Large Language Models (LLMs) have one big drawback: privacy! We cannot benefit from these tools when dealing with sensitive or proprietary data.

This brings us to understanding how to operate private LLMs locally. Open-source models offer a solution, but they come with their own set of challenges and benefits.

Join me in my quest to discover a local alternative to ChatGPT that you can run on your own computer.

Setting Expectations

Open-source is vast, with thousands of models available, varying from those offered by large organizations like Meta to those developed by individual enthusiasts. Running them, however, presents their own set of challenges:

- They might require robust hardware: plenty of memory and possibly a GPU

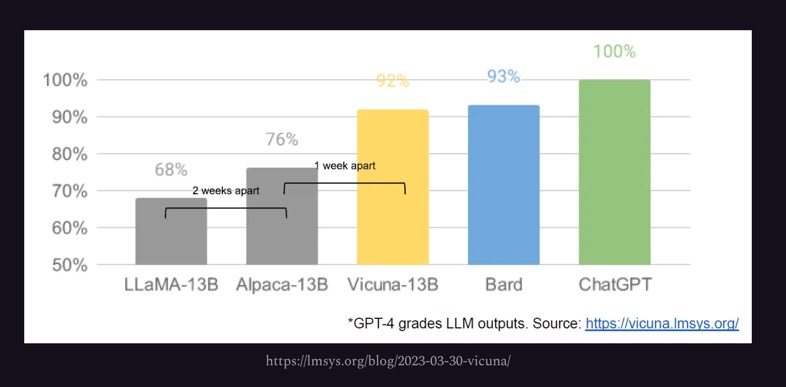

- While open-source models are improving, they typically don’t match the capabilities of more polished products like ChatGPT, which benefits from the support of a large team of engineers.

- Not all models can be used commercially.

The gap between open and closed-source models is narrowing, as a leaked document from Google suggested.

1. Hugging Face and Transformers

Hugging Face is the Docker Hub equivalent for Machine Learning and AI, offering an overwhelming array of open-source models. Fortunately, Hugging Face regularly benchmarks the models and presents a leaderboard to help choose the best models available.

Hugging Face also provides transformers, a Python library that streamlines running a LLM locally. The following example uses the library to run an older GPT-2 microsoft/DialoGPT-medium model. On the first run, the Transformers will download the model, and you can have five interactions with it. The script requires also PyTorch to be installed.

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium", padding_side='left')

model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

# source: https://huggingface.co/microsoft/DialoGPT-medium

# Let's chat for 5 lines

for step in range(5):

# encode the new user input, add the eos_token and return a tensor in Pytorch

new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

# append the new user input tokens to the chat history

bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

# generated a response while limiting the total chat history to 1000 tokens,

chat_history_ids = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id)

# pretty print last output tokens from bot

print("DialoGPT: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))Transformers pros:

- Automatic model downloads

- Code snippets available

- Ideal for experimentation and learning

Transformers cons:

- Requires solid understanding of ML and NLP

- Coding and configuration skills are necessary

2. LangChain

Another way we can run LLM locally is with LangChain. LangChain is a Python framework for building AI applications. It provides abstractions and middleware to develop your AI application on top of one of its supported models. For example, the following code asks one question to the microsoft/DialoGPT-medium model:

from langchain.llms.huggingface_pipeline import HuggingFacePipeline

hf = HuggingFacePipeline.from_model_id(

model_id="microsoft/DialoGPT-medium", task="text-generation", pipeline_kwargs={"max_new_tokens": 200, "pad_token_id": 50256},

)

from langchain.prompts import PromptTemplate

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate.from_template(template)

chain = prompt | hf

question = "What is electroencephalography?"

print(chain.invoke({"question": question}))LangChain Pros:

- Easier model management

- Useful utilities for AI application development

LangChain Cons:

- Limited speed, same as Transformers

- You must still code the application’s logic or create a suitable UI.

3. Llama.cpp

Llama.cpp is a C and C++ based inference engine for LLMs, optimized for Apple silicon and running Meta’s Llama2 models.

Once we clone the repository and build the project, we can run a model with:

$ ./main -m /path/to/model-file.gguf -p "Hi there!"Llama.cpp Pros:

- Higher performance than Python-based solutions

- Supports large models like Llama 7B on modest hardware

- Provides bindings to build AI applications with other languages while running the inference via Llama.cpp.

Llama.cpp Cons:

- Limited model support

- Requires tool building

4. Llamafile

Llamafile, developed by Mozilla, offers a user-friendly alternative for running LLMs. Llamafile is known for its portability and the ability to create single-file executables.

Once we download llamafile and any GGUF-formatted model, we can start a local browser session with:

$ ./llamafile -m /path/to/model.ggufLlamafile pros:

- Same speed benefits as Llama.cpp

- You can build a single executable file with the model embedded

Llamafile cons:

- The project is still in the early stages

- Not all models are supported, only the ones Llama.cpp supports.

5. Ollama

Ollama is a more user-friendly alternative to Llama.cpp and Llamafile. You download an executable that installs a service on your machine. Once installed, you open a terminal and run:

$ ollama run llama2Ollama will download the model and start an interactive session.

Ollama pros:

- Easy to install and use.

- Can run llama and vicuña models.

- It is really fast.

Ollama cons:

- Provides limited model library.

- Manages models by itself, you cannot reuse your own models.

- Not tunable options to run the LLM.

- No Windows version (yet).

6. GPT4ALL

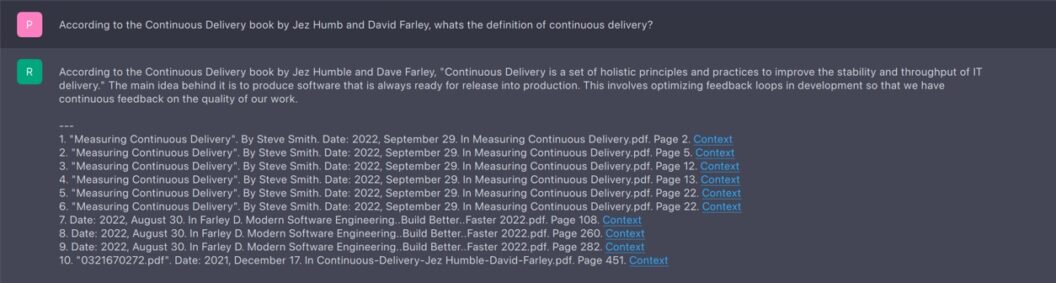

GPT4ALL is an easy-to-use desktop application with an intuitive GUI. It supports local model running and offers connectivity to OpenAI with an API key. It stands out for its ability to process local documents for context, ensuring privacy.

Pros:

- Polished alternative with a friendly UI

- Supports a range of curated models

Cons:

- Limited model selection

- Some models have commercial usage restrictions

Conclusion

Choosing the right tool to run an LLM locally depends on your needs and expertise. From user-friendly applications like GPT4ALL to more technical options like Llama.cpp and Python-based solutions, the landscape offers a variety of choices. Open-source models are catching up, providing more control over data and privacy.

This guide offers clarity in navigating the world of local LLMs. As these models evolve, they promise to become even more competitive with products like ChatGPT.

what about text-generation-webui ? It runs locally.

Oustandingly clear and easy to follow piece of work chap, well done and many thanks for your time spent & expertise shared – very grateful.

First time running LLMs locally! Thank you!

This video was particularly helpful! Thank you!

Awesome information! Thank you.