Handling flaky tests in software development can be tricky, especially for tests that fail only a small percentage of the time. The most reliable way we have to detect flaky tests is to retry the test suite several times.
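To see why several runs are needed, assume (purely for illustration) that a flaky test fails independently with probability p on each run. The chance of seeing at least one failure in n runs is then 1 - (1 - p)^n. So the number of runs needed to reach a detection confidence c is the smallest n with n >= ln(1 - c) / ln(1 - p). A minimal Python sketch of that arithmetic follows; the function names are hypothetical.

```python
import math


def detection_probability(p: float, runs: int) -> float:
    """Chance of at least one failure in `runs` independent runs,
    assuming the test fails with probability `p` on each run."""
    return 1.0 - (1.0 - p) ** runs


def runs_needed(p: float, confidence: float) -> int:
    """Smallest number of runs whose detection probability reaches `confidence`."""
    if not 0.0 < p < 1.0 or not 0.0 < confidence < 1.0:
        raise ValueError("p and confidence must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p))


if __name__ == "__main__":
    # A test that fails 1% of the time, to be caught with 95% confidence.
    print(runs_needed(0.01, 0.95))
    print(round(detection_probability(0.01, 20), 3))
```

Under these assumptions, a test that fails 1% of the time needs 299 runs to be caught with 95% confidence. Twenty runs catch it only about 18% of the time.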

Finding Flaky Tests: To Retry or Not To Retry?

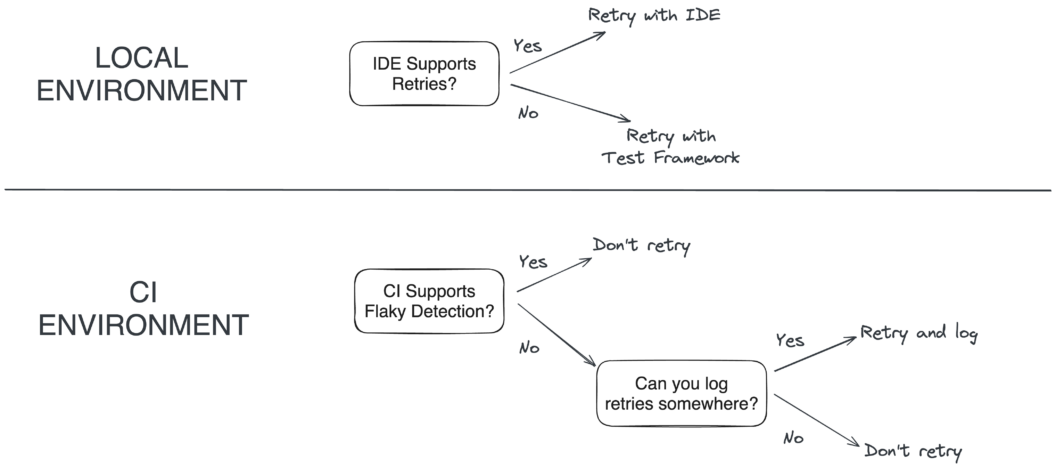

The decision to retry these tests depends on the environment you’re working in.

Local Development

In local environments, retrying flaky tests can be beneficial because it helps developers identify and address transient errors. Most Integrated Development Environments (IDEs) can run tests directly and give immediate feedback. Alternatively, most test frameworks offer configuration options to retry failed tests automatically, helping to smooth over these intermittent issues.

Continuous Integration Environments

For CI environments, the approach is more nuanced. If your CI platform has dedicated support for flaky tests, such as a flaky-test dashboard, it's better to let the failures be recorded and use those tools to track and fix the tests. This ensures that flaky tests are highlighted for further investigation rather than hidden. If you can instead log test failures for later analysis without retrying, that is also a viable approach. Generally, if you lack the tools to properly track and analyze flaky tests, avoid retries in CI so that every test result accurately reflects the state of the code.
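The distinction that flaky-test tooling relies on can be sketched in a few lines. A test that fails and then passes on a retry is flaky, while a test that fails on every attempt is a real failure. The function names and labels below are hypothetical and are not any CI platform's API. The sketch assumes each test's attempts are recorded in order as booleans, with True meaning pass.

```python
from enum import Enum


class Verdict(Enum):
    PASSED = "passed"  # passed on the first attempt
    FLAKY = "flaky"    # failed at least once, then passed on a retry
    FAILED = "failed"  # failed on every attempt


def classify(attempts: list[bool]) -> Verdict:
    """Classify one test from its ordered attempt results (True = pass)."""
    if not attempts:
        raise ValueError("a test must have at least one attempt")
    if attempts[0]:
        return Verdict.PASSED
    return Verdict.FLAKY if any(attempts[1:]) else Verdict.FAILED


def flaky_report(results: dict[str, list[bool]]) -> list[str]:
    """Names of tests that only passed after retrying, sorted for stable output."""
    return sorted(name for name, attempts in results.items()
                  if classify(attempts) is Verdict.FLAKY)


if __name__ == "__main__":
    runs = {
        "test_login": [True],
        "test_upload": [False, False, True],
        "test_checkout": [False, False, False],
    }
    for name, attempts in runs.items():
        print(name, classify(attempts).value)
    print(flaky_report(runs))
```

Reporting the FLAKY bucket separately, instead of folding it into PASSED, is what keeps retries from hiding flakiness.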

How to Configure Retry For Flaky Test Detection

JavaScript and TypeScript with Jest

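For JavaScript and TypeScript testing with Jest, you can configure retries directly in your Jest configuration. First, we create a small setup file at the root of our project:

```javascript
// retry-tests.js
// Retry each failing test up to 5 more times, printing the error of every failed attempt.
jest.retryTimes(5, { logErrorsBeforeRetry: true });
```

Then, we load it in jest.config.js:

```javascript
// jest.config.js
module.exports = {
  // Run retry-tests.js in every test file once the test framework is installed.
  setupFilesAfterEnv: ['<rootDir>/retry-tests.js'],
  reporters: ['default'],
};
```

This automatically reruns failed tests a specific number of times, optionally logging the error before each retry. Note that jest.retryTimes is only available with the default jest-circus test runner.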

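We can also retry only the tests in a specific file by calling jest.retryTimes at the top of that test file, for example:

```javascript
// Applies to every test in this file.
jest.retryTimes(5, { logErrorsBeforeRetry: true });

test('Flaky Test', () => {
  // Fails roughly half of the time.
  const value = Math.random();
  expect(value).toBeGreaterThan(0.5);
});
```

The logErrorsBeforeRetry option makes Jest print the error of each failed attempt to the console before retrying. As a result, flaky failures stay visible even when a later retry passes.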
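Conceptually, jest.retryTimes(n) gives a failing test up to n additional attempts, and logErrorsBeforeRetry reports each intermediate error. The Python sketch below mirrors those semantics for a plain function. It illustrates the retry behaviour only and is not how Jest is implemented; retry_times is a hypothetical helper.

```python
import logging
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("retry")


def retry_times(fn: Callable[[], T], retries: int, log_errors: bool = True) -> T:
    """Call `fn`, retrying up to `retries` more times if it raises.

    If every attempt fails, the last exception is re-raised, so a test
    that never passes is still reported as a failure.
    """
    if retries < 0:
        raise ValueError("retries must be >= 0")
    for attempt in range(1, retries + 2):
        try:
            return fn()
        except Exception as exc:
            if attempt > retries:
                raise  # out of retries: surface the real failure
            if log_errors:
                # Like logErrorsBeforeRetry: keep a trace of each failed attempt.
                log.warning("attempt %d failed, retrying: %r", attempt, exc)
    raise AssertionError("unreachable")  # the loop always returns or raises


if __name__ == "__main__":
    logging.basicConfig(level=logging.WARNING)
    calls = 0

    def flaky_check() -> str:
        # Hypothetical flaky test body: fails on the first two calls only.
        global calls
        calls += 1
        if calls < 3:
            raise AssertionError(f"call {calls} failed")
        return "passed"

    print(retry_times(flaky_check, retries=5), "after", calls, "attempts")
```

Re-raising the last error after the final attempt matters. Without it, a test that never passes would be silently reported as green.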

Ruby with RSpec-Retry

In Ruby, using the RSpec framework, flaky tests can be managed by installing the rspec-retry gem.

```shell
$ gem install rspec rspec-retry
$ rspec --init
```

Next, we need to enable the gem in the spec/spec_helper.rb file by adding:

```ruby
require 'rspec/retry'
```

Finally, add the following lines to the RSpec.configure do |config| section:

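```ruby
RSpec.configure do |config|
  # Show retry progress in the output.
  config.verbose_retry = true
  # Print the failure message of each failed try.
  config.display_try_failure_messages = true
  # Run failing examples up to 20 times.
  config.default_retry_count = 20
  # rest of the config ...
end
```

This makes RSpec retry failed tests, running each failing example up to 20 times.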
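A large retry count has a cost: it makes a flaky test very unlikely to be reported at all, which is why verbose_retry and display_try_failure_messages are worth enabling. Assume, for illustration, that a test fails independently with probability p per attempt. It is then reported as failing only when all n of its attempts fail, which happens with probability p^n. A minimal sketch, with a hypothetical function name:

```python
def reported_failure_probability(p: float, attempts: int) -> float:
    """Chance that a test failing with probability `p` per attempt fails
    all `attempts` attempts and is therefore reported as a failure."""
    if not 0.0 <= p <= 1.0 or attempts < 1:
        raise ValueError("p must be in [0, 1] and attempts must be >= 1")
    return p ** attempts


if __name__ == "__main__":
    # How often a flaky test would surface with up to 20 attempts.
    for p in (0.1, 0.5, 0.9):
        print(f"p={p}: reported {reported_failure_probability(p, 20):.2e} of the time")
```

Take a test that fails half of the time. With 20 attempts it would surface only about once in a million runs (0.5^20 ≈ 9.5 × 10^-7), so without the retry messages its flakiness would go unnoticed.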

Alternatively, you can specify the number of retries directly in your tests, giving flaky tests several chances to pass before being marked as failures.

```ruby
describe 'Flaky Test' do
  # Try this example up to 10 times before reporting a failure.
  it 'should randomly succeed', :retry => 10 do
    expect(rand(2)).to eq(1)
  end
end
```

You can also override the retry count with the RSPEC_RETRY_RETRY_COUNT environment variable:

```shell
$ export RSPEC_RETRY_RETRY_COUNT=20
```

Python with Pytest-Retry and FlakeFinder

The PyTest framework provides two switches to re-run failed tests:

- pytest --lf: re-run only the tests that failed last time
- pytest --ff: re-run all tests, failed tests first

So out of the box we get decent retry features. But in order to have PyTest automatically retry failed tests without running additional commands, we can install the pytest-retry plugin:

```shell
$ pip install pytest-retry
```

Once installed, we can add the @pytest.mark.flaky decorator to our tests to retry them automatically. For example, this test will be retried up to 20 times:

```python
import random

import pytest


@pytest.mark.flaky(retries=20)
def test_flaky():
    # randrange(1, 10) returns 1..9, so this fails about 5 times in 9.
    if random.randrange(1, 10) < 6:
        pytest.fail("bad luck")
```

In the spirit of "fail fast", we can combine this with pytest --ff to rerun failed tests first.

Pytest-retry takes care of retries, but Python developers also have a tool specifically intended to find flaky tests by running them repeatedly: pytest-flakefinder.

We can install the plugin with:

```shell
$ pip install pytest-flakefinder
```

Then, we can run each test multiple times with:

```shell
$ pytest --flake-finder --flake-runs=20
```

This runs each test 20 times and shows the results at the end, which makes it a great way to quickly identify flaky tests. To spread the runs across CPU cores, the plugin can be combined with pytest-xdist (pip install pytest-xdist, then add -n auto).
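The idea behind --flake-finder can be sketched without pytest: run every test many times regardless of outcome, and report how often each one fails. find_flakes below is a hypothetical helper, not part of pytest-flakefinder, and it runs sequentially rather than in parallel.

```python
import random
from typing import Callable


def find_flakes(tests: dict[str, Callable[[], None]], runs: int) -> dict[str, float]:
    """Run each test `runs` times and return its failure rate.

    A run counts as failed if the test raises any exception
    (an AssertionError from a failed check, or any other error).
    """
    rates = {}
    for name, test in tests.items():
        failures = 0
        for _ in range(runs):
            try:
                test()
            except Exception:
                failures += 1
        rates[name] = failures / runs
    return rates


def test_stable() -> None:
    assert 1 + 1 == 2


def test_flaky() -> None:
    # Same shape as the pytest example above: fails for values 1..5 out of 1..9.
    assert random.randrange(1, 10) >= 6


if __name__ == "__main__":
    tests = {"test_stable": test_stable, "test_flaky": test_flaky}
    for name, rate in find_flakes(tests, 20).items():
        # A rate strictly between 0% and 100% marks a flaky test.
        print(f"{name}: failed {rate:.0%} of runs")
```

Unlike a retry, this approach never stops at the first pass. That is what makes it useful for measuring how flaky a test is, not just whether it eventually passes.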

Java with Surefire

For Java projects using Maven, retries are handled by the Maven Surefire plugin, which runs the JUnit tests.

First, we add a current JUnit 5 version (5.10.2 at the time of writing) to our pom.xml:

```xml
<dependencies>
  <dependency>
    <groupId>org.junit.jupiter</groupId>
    <artifactId>junit-jupiter-api</artifactId>
    <version>5.10.2</version>
    <scope>test</scope>
  </dependency>
</dependencies>
```

Next, we add the Maven Surefire Plugin to the build/pluginManagement/plugins section of the pom.xml:

```xml
<build>
  <pluginManagement>
    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>3.2.5</version>
      </plugin>
    </plugins>
  </pluginManagement>
</build>
```

Now we can ask Maven to re-run failing tests by setting surefire.rerunFailingTestsCount in the test command:

```shell
$ mvn -Dsurefire.rerunFailingTestsCount=20 test
```

This reruns each failing test up to 20 times. The same value can also be set permanently with a <rerunFailingTestsCount> element in the plugin's <configuration> block, which gives a systematic way to rerun failing tests.
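When a test passes on a rerun, Surefire counts it as a flake rather than a failure. As far as we know, it records the failed attempts as <flakyFailure> or <flakyError> elements inside the test case in target/surefire-reports/TEST-*.xml. The Python script below assumes that report layout and lists the flaky tests for later analysis. Verify the element names against the reports your Surefire version actually produces.

```python
import glob
import xml.etree.ElementTree as ET

# Element names Surefire is assumed to use for failed attempts of a test
# that eventually passed on a rerun.
FLAKY_TAGS = ("flakyFailure", "flakyError")


def flaky_tests(report: ET.Element) -> list[str]:
    """Return 'classname.name' for each <testcase> containing a flaky-attempt element."""
    found = []
    for case in report.iter("testcase"):
        if any(case.find(tag) is not None for tag in FLAKY_TAGS):
            found.append(f"{case.get('classname')}.{case.get('name')}")
    return found


def scan_reports(pattern: str = "target/surefire-reports/TEST-*.xml") -> list[str]:
    """Collect flaky tests from every Surefire XML report matching `pattern`."""
    results = []
    for path in sorted(glob.glob(pattern)):
        results.extend(flaky_tests(ET.parse(path).getroot()))
    return results


if __name__ == "__main__":
    for name in scan_reports():
        print("flaky:", name)
```

Running this after mvn test gives a plain list of flaky tests that can be logged or fed into an issue tracker, in line with the "log failures for later analysis" approach.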

Rust with NexTest

In Rust, while Cargo does not natively support test retries, extensions like cargo-nextest can be installed to add this functionality.

```shell
$ curl -LsSf https://get.nexte.st/latest/mac | tar zxf - -C ${CARGO_HOME:-~/.cargo}/bin
```

This command downloads the macOS build; on any platform, nextest can also be installed with cargo install --locked cargo-nextest. We can customize the test behavior in the nextest configuration file, .config/nextest.toml at the root of the workspace:

```toml
[profile.default]
# Retry failing tests up to 20 times, waiting 1 second between attempts.
retries = { backoff = "fixed", count = 20, delay = "1s" }
```

Now, to run tests with retries, we use cargo nextest instead of cargo test:

```shell
$ cargo nextest run
```

We can also specify the number of retries on the command line:

```shell
$ cargo nextest run --retries 10
```

Configuring retries either via command-line arguments or the configuration file lets developers automatically rerun failed tests.
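The backoff setting controls how long nextest waits between attempts. The sketch below computes the wait before each retry for a fixed delay and, for comparison, for an exponential schedule. It assumes that exponential backoff doubles the delay on each retry, up to an optional cap. That is a common convention, but check it against the nextest documentation; nextest also offers options such as jitter, which are not modelled here. retry_delays is a hypothetical helper.

```python
from typing import Optional


def retry_delays(count: int, delay: float, backoff: str = "fixed",
                 max_delay: Optional[float] = None) -> list[float]:
    """Seconds waited before each of `count` retries.

    'fixed' waits `delay` every time. 'exponential' is assumed here to double
    the wait on each retry, optionally capped at `max_delay`; jitter is ignored.
    """
    if count < 0 or delay < 0:
        raise ValueError("count and delay must be non-negative")
    if backoff == "fixed":
        return [delay] * count
    if backoff == "exponential":
        waits = [delay * 2 ** i for i in range(count)]
        if max_delay is not None:
            waits = [min(w, max_delay) for w in waits]
        return waits
    raise ValueError(f"unknown backoff: {backoff!r}")


if __name__ == "__main__":
    # The configuration above: 20 retries with a fixed 1-second delay.
    print("fixed, worst case:", sum(retry_delays(20, 1.0)), "seconds of waiting")
    print("exponential:", retry_delays(5, 1.0, "exponential", max_delay=8.0))
```

Under these assumptions, the fixed 20-retry configuration can add up to 20 seconds of waiting to a test that never passes, on top of its own run time.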

PHP with PHPUnit

PHPUnit can repeat tests out of the box. We only need to install it with Composer:

```shell
$ composer require --dev phpunit/phpunit
```

Then, configure PHPUnit to look for tests in our tests folder (phpunit.xml):

```xml
<phpunit bootstrap="vendor/autoload.php"
         colors="true">
  <testsuites>
    <testsuite name="Application Test Suite">
      <directory>tests</directory>
    </testsuite>
  </testsuites>
</phpunit>
```

We can now use the --repeat option to run the tests repeatedly:

```shell
$ ./vendor/bin/phpunit --repeat 10
```

We can run previously failed tests first by adding --cache-result --order-by=depends,defects to the invocation:

```shell
$ ./vendor/bin/phpunit --repeat 10 --cache-result --order-by=depends,defects
```

This runs each test 10 times and caches the results. On the next run, the tests that failed are executed first.
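Running previously failed tests first, like --order-by=defects here or pytest's --ff, boils down to a stable sort on whether each test failed in the previous run. The minimal sketch below assumes the previous failures are available as a set of test names. PHPUnit and pytest each keep their own cache format, which this sketch does not read, and it ignores the dependency ordering that depends adds. failed_first is a hypothetical helper.

```python
def failed_first(tests: list[str], last_failed: set[str]) -> list[str]:
    """Order tests so that previously failed ones run first.

    Python's sort is stable, so tests keep their original relative order
    within the 'failed last time' and 'passed last time' groups.
    """
    # False (failed last time) sorts before True (did not fail).
    return sorted(tests, key=lambda name: name not in last_failed)


if __name__ == "__main__":
    suite = ["test_a", "test_b", "test_c", "test_d"]
    print(failed_first(suite, {"test_c", "test_a"}))
```

Because the sort is stable, the rest of the suite keeps its usual order, which keeps the runs reproducible.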

Elixir with ExUnit

Elixir projects use ExUnit as the test runner by default. ExUnit does not provide a retry option; however, it does provide a --failed switch:

```shell
$ mix test --failed
```

This option re-runs only the tests that failed in the last execution. We can leverage it in a shell script to automatically re-run failed tests until they succeed or reach the maximum number of retries:

```bash
#!/bin/bash
# rerunner.sh: re-run failed tests in Elixir

# Run the whole suite once; stop if everything passes.
mix test && exit 0

# Retry only the failed tests, up to 20 times.
for i in {1..20}; do
  echo "=> Re-running failed tests (attempt $i)"
  mix test --failed && exit 0
done

# Still failing after 20 retries: report failure to the caller (e.g. CI).
exit 1
```

With this simple script we can emulate the retry behavior of other frameworks.

Go with GoTestSum

Go ships with a built-in test runner (go test), which unfortunately does not support automatic retries. For that, we need to install GoTestSum:

```shell
$ go install gotest.tools/gotestsum@latest
```

Now we can use gotestsum --rerun-fails like this:

```shell
$ gotestsum --rerun-fails --packages="./..."
```

In the --packages flag we can list the packages to test, or use ./... to recursively include every package in the project.

One problem you may encounter is that Go caches successful test results, which can hide flakiness. An unchanged test that passed once is reported from the cache instead of being run again. To bypass the cache, pass a -count flag to go test after --. For example, to run each test 20 times:

```shell
$ gotestsum --rerun-fails --packages="./..." -- -count=20
```

Any explicit -count (even -count=1) disables the test result cache, so the tests actually execute on every invocation.
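To see which tests actually flaked across repeated runs, you can tally the per-test events that go test -json prints: one JSON object per line, with fields such as Action, Package and Test. The sketch assumes the output was saved with something like go test -json -count=20 ./... > results.json. tally_go_results and flaky are hypothetical helpers.

```python
import json
import sys
from collections import Counter
from typing import Iterable


def tally_go_results(lines: Iterable[str]) -> dict[str, Counter]:
    """Count pass/fail events per test from `go test -json` output lines."""
    tallies: dict[str, Counter] = {}
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip blank or non-JSON lines (e.g. build output)
        event = json.loads(line)
        test = event.get("Test")
        action = event.get("Action")
        # Package-level events have no Test field and are ignored.
        if test and action in ("pass", "fail"):
            key = f"{event.get('Package', '')}.{test}"
            tallies.setdefault(key, Counter())[action] += 1
    return tallies


def flaky(tallies: dict[str, Counter]) -> list[str]:
    """Tests that both passed and failed across the repeated runs."""
    return sorted(name for name, c in tallies.items() if c["pass"] and c["fail"])


if __name__ == "__main__":
    # Usage: python tally.py < results.json
    results = tally_go_results(sys.stdin)
    for name in flaky(results):
        c = results[name]
        print(f"{name}: {c['fail']} failures / {c['pass'] + c['fail']} runs")
```

A test that appears with both pass and fail events for the same code is, by definition, flaky. A test that only fails points to a real bug instead.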

Conclusion

Whether you work locally or in CI, the key is to strike a balance: use retries to identify and fix flaky tests without letting them undermine overall confidence in your test suite.

Learn more about flaky tests: