Channable develops a SaaS solution that e-commerce companies use to send their products or services to various marketplaces, comparison engines and affiliate platforms. Built by a company of 100+ employees, the platform has grown to be the number one product feed management platform in the Netherlands. The Channable team focuses on user experience, performance, and maximising the range of channel integrations available to serve users’ needs.

The Challenge

The Channable team’s workflow only permits merges to the master branch once the CI system has checked the merge commit. If another merge to master happens in the meantime, the results of all other merge commit builds are invalidated. “Long wait times for CI are frustrating,” explains Robert Kreuzer, co-founder and CTO, “if it takes too long you go and do something else in the meantime, and when you remember to check your build, somebody else merged to master, so you need to start over.” In addition, long build times lead to more context switching, so for the sake of productivity the team was keen to speed up their CI/CD pipeline.

Channable was experiencing two specific issues with their existing CI solution, Travis. First, wait times for jobs to start were long and there was no visibility into what was causing the delay. Second, Channable’s pipeline includes compiling Haskell code to native binaries, which is CPU-intensive. The team found that builds on Travis machines were a lot slower than on their own workstations.

The excessive waiting and pipeline execution times were brought sharply into focus in the context of production issues. Getting a hotfix from push to deployment could easily take 20 minutes, which, as Robert highlights, “is a very long time for a critical bug to be in production.”

Overall, Channable needed their new CI/CD solution to:

- Decrease wait times for queued builds

- Provide faster build machines for CPU-intensive compilation

- Offer fast, local caching for bandwidth-intensive git operations

The Solution

The Channable team surveyed a range of solutions including AppVeyor, Azure DevOps, Bitbucket Pipelines, CircleCI, Cirrus, CodeShip, Concourse, GitLab CI, and SourceHut builds. The availability of multi-core build machines and flexible, easy-to-use caching stood out as differentiators in Semaphore’s favour.

“The biggest impact for us was the availability of bigger multi-core VMs,” says Robert. “Especially the Haskell compilation and our test suite could benefit a lot from the added parallelism.” Semaphore offers three flavours of Linux machine, and each job in a pipeline runs in a separate build agent. For their CPU-intensive Haskell compilation step, the Channable team chose the most powerful, 8-core variant to maximise speed.

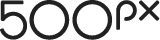

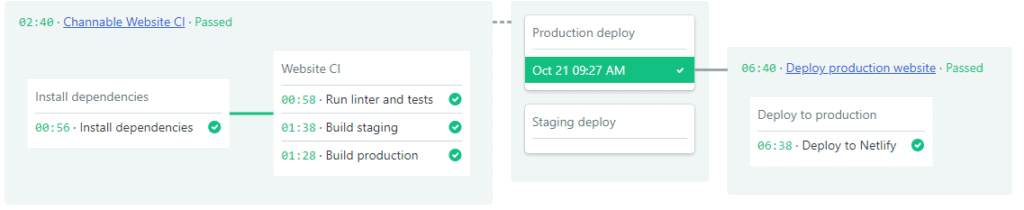

Further improvements over the old solution came via caching. The first type of caching that boosted performance is Semaphore’s local Git cache, which considerably reduced the time spent cloning Git repositories. The second type is the per-project cache that Semaphore provides, which sped up Channable’s pipeline and reduced download traffic. This cache is available in every pipeline job out of the box, so configuring the build to use it was easy. The Channable workflow stores infrequently changing dependencies in it after the first successful build of each day. “The Semaphore cache was even better than expected,” concludes Robert, “very fast and easy to use.”
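The once-a-day dependency caching described above can be illustrated with a small sketch. This is not Channable’s actual configuration and it does not use Semaphore’s cache API; the function names, the `deps` prefix, and the in-memory set standing in for the project cache are all hypothetical. It only shows the idea of deriving a cache key from the current date, so that the first successful build of a day stores the dependencies and later builds on the same day reuse them.

```python
from datetime import date


def daily_cache_key(prefix: str, day: date) -> str:
    """Build a cache key that changes once per calendar day.

    Hypothetical helper: the key format is an assumption for illustration.
    """
    return f"{prefix}-{day.isoformat()}"


def should_store(key: str, existing_keys: set) -> bool:
    """Store dependencies only if no build has stored them under this key yet."""
    return key not in existing_keys


if __name__ == "__main__":
    # An in-memory set stands in for the per-project cache (assumption).
    cache_keys = set()
    key = daily_cache_key("deps", date(2020, 1, 15))

    # First successful build of the day: nothing stored yet, so store.
    if should_store(key, cache_keys):
        cache_keys.add(key)

    # Later builds on the same day find the key and skip the upload.
    print(key, should_store(key, cache_keys))
```

Keying on the date keeps the stored dependencies at most a day old while avoiding a re-upload on every build, which fits dependencies that change infrequently.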

The Results

Semaphore queues jobs to run in a pool of up to 50 build machines per organization, a ten-fold increase over Travis’ most powerful advertised plan. With more agents to run jobs, and more powerful agents in use for the biggest jobs, the new CI/CD solution paid off as anticipated. As Robert summarises, “The overall gain in performance compared to Travis had the expected result: We roughly cut the time from push to result in half.” A lot of the saving came from the high-performance build agent used for the CPU-intensive Haskell compilation. The time for a full rebuild of the native binaries (with all dependencies in the cache) went from 30 minutes under Travis to about 10 minutes with Semaphore.

Overall, the team got the lead-time and productivity improvements they were looking for. “Time to ship (from merging a PR to the package being deployed) went down due to shorter wait times,” relates the CTO, “making deployments less disruptive to the overall workflow.”