LangChain is a new library, available for Python and JavaScript, that helps developers build complex solutions on top of Large Language Models (LLMs) such as OpenAI’s GPT-4.

Under the hood, its capabilities can be categorized into three primary domains:

- A unified API designed for seamless interaction with LLMs and conventional data providers, aiming to be a one-stop shop for building LLM-powered applications.

- A comprehensive toolkit that formalizes the Prompt Engineering process, ensuring adherence to best practices.

- Interaction with LangChain revolves around ‘Chains’. Simply put, they provide a way to execute a sequence of calls to LLMs and other components to generate a complex interaction.

We will demonstrate some of LangChain’s capabilities by developing a simple chat application that answers questions about the book “The Four Corners in California” by Amy Ella Blanchard, which we obtained from Project Gutenberg.

We selected this book because it resides in the public domain and currently falls outside ChatGPT’s coverage, allowing us to demonstrate our ability to generate answers based on custom data.

The tutorial relies on OpenAI’s API services, and following it will incur some charges to your account. LangChain does support other providers, and while some of them may offer a free tier, we have not experimented with them, and their results might vary from the examples below.

The tutorial will consist of two parts:

- In the first part, we will parse the book content and generate “Vector Embeddings”, store them in a Redis database and demonstrate the similarity search capabilities provided by LangChain and Redis.

- The second part will include the chat implementation, which will accept user input, fetch the relevant data using Vector Similarity Search and pass the text to OpenAI to generate a response.

Before we start

To follow this tutorial, you will need a local Python environment. We also recommend setting up a local Redis instance, though you may also use the free tier that Redis Cloud provides.

The full source is available in our GitHub repository, and you can follow the instructions provided to get started.

If you’ve not set up a Python environment before, we recommend following Google’s Python guide.

Once you’ve set up your development environment, you will need to install the following dependencies:

```shell
pip install langchain
pip install openai
pip install redis
pip install tiktoken
```

Finally, we recommend setting up a local Redis instance using the official Docker image. Alternatively, set up a free account at Redis Cloud.

Part 1: Preparing The Data

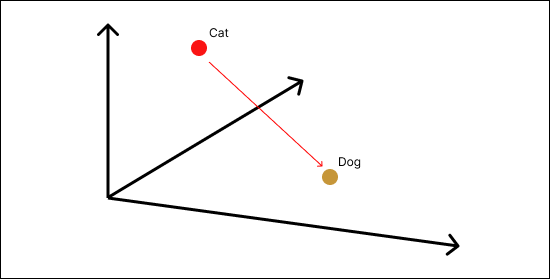

Searching plain text becomes increasingly complex when you venture beyond word or phrase matching. To address this challenge we will use a solution called Vector Similarity Search, which is often used alongside Large Language Models.

As the name suggests, Vector Similarity Search involves splitting text into chunks and turning each chunk into a vector, represented as an array of numbers. By placing these vectors in a multi-dimensional space we can measure the distance between any two chunks of text: the closer two vectors are, the more similar the chunks they represent.

If this sounds a little too abstract, head over to Google’s article “Find anything blazingly fast with Google’s vector search technology” for a more in-depth explanation of vector search technology.
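To make the idea of distance between vectors concrete, here is a minimal sketch that compares toy vectors using cosine similarity, one common similarity measure. The three-dimensional vectors and their names are made up for illustration; real embedding models return vectors with many more dimensions, and this is not necessarily the metric used by any particular store.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings" (assumption: invented values)
chunk_about_home = [0.9, 0.1, 0.0]
chunk_about_school = [0.1, 0.9, 0.2]
question_about_home = [0.8, 0.2, 0.1]

# The question vector points in almost the same direction as the first chunk,
# so its similarity score is higher.
print(cosine_similarity(question_about_home, chunk_about_home))
print(cosine_similarity(question_about_home, chunk_about_school))
```

A score close to 1 means the two vectors point in nearly the same direction, while a score close to 0 means they are largely unrelated.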

Heading back to our example, let’s look at the first part of the code, which follows the process we just described.

```python
# data_parser.py
import os

from langchain.embeddings import OpenAIEmbeddings
from langchain.document_loaders import TextLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores.redis import Redis

# Load the book as a single document
loader = TextLoader("thefourcorners.txt")
documents = loader.load()

# Split the document into chunks of roughly 1000 characters
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=20)
docs = text_splitter.split_documents(documents)

# Set your OpenAI API key as an environment variable
os.environ['OPENAI_API_KEY'] = "API_KEY"

# We will use OpenAI as our embeddings provider
embeddings = OpenAIEmbeddings()

# Generate a vector for every chunk and store it in Redis
rds = Redis.from_documents(
    docs, embeddings, redis_url="redis://localhost:6379", index_name="chunk"
)
```

The code has three parts:

- Reading the document – we use a LangChain document loader to load thefourcorners.txt. You can get a copy of the book directly from Gutenberg or from our code repository. It’s worth noting that while we use a simple text loader, LangChain supports multiple formats, including HTML, PDF, and CSV.

- Splitting the document – The book contains around 75k words, much too long for a single vector. We use LangChain’s RecursiveCharacterTextSplitter, which roughly breaks the text into paragraphs based on the length and overlap that we provided.

- Generating vectors and populating Redis – LangChain provides easy integration to several databases that support vector search, including Redis. We provide the split document, the OpenAI embedding model, and the URL of our Redis instance, and LangChain does the rest.
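To illustrate how the chunk size and overlap from the splitting step interact, here is a simplified sketch that cuts text into fixed-size character windows. It is not the algorithm used by RecursiveCharacterTextSplitter, which prefers to break on separators such as paragraph boundaries; the function name `split_with_overlap` is hypothetical.

```python
def split_with_overlap(text, chunk_size, chunk_overlap):
    """Cut text into character windows that share `chunk_overlap` characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


chunks = split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1)
print(chunks)  # ['abcd', 'defg', 'ghij']
```

Each chunk repeats the last character of the previous one, so a sentence that straddles a boundary is less likely to lose its context entirely.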

Using the Redis vector store created in the third step, we can now search our documents using Vector Similarity Search.

The following code will return a list of documents, namely the text chunks whose vectors are closest to the question we provide in the example below.

```python
results = rds.similarity_search("Where does mrs Ruan live")
```

You can experiment with the results using the vector_search.py example in the repository.

If we open our Redis instance and inspect the data we generated, we can see around 500 Hash documents with the following structure:

```
"doc:chunks:9187ce3bcb824084a56f7d25d4dec412": {
    "content_vector": "u\\x05z<\\x93\\x14j9\\x17\\xc8\\xf9;\\xd...",
    "content": "The related paragraph text.",
    "metadata": "{}"
}
```

As we can see, LangChain creates a hash containing a binary representation of the vector, the associated text, and a metadata field. The metadata can be used when processing structured data, such as product lists, to filter content based on tags (e.g., only perform vector similarity search for items tagged as books on Amazon).

A final note before we move on to writing the chat application: while the RecursiveCharacterTextSplitter provided by LangChain works well for generic documents, it lacks an understanding of the content structure, which can degrade the results.
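The binary representation can look opaque, so here is a small sketch of how a list of floats may be packed into bytes and read back. It assumes the vector is stored as consecutive little-endian 32-bit floats; we have not verified that this matches the exact layout LangChain writes, and the helper names are hypothetical.

```python
import struct


def pack_vector(values):
    """Serialize floats as little-endian 32-bit values (assumed storage format)."""
    return struct.pack(f"<{len(values)}f", *values)


def unpack_vector(blob):
    """Turn the raw bytes back into a list of floats."""
    count = len(blob) // 4  # 4 bytes per 32-bit float
    return list(struct.unpack(f"<{count}f", blob))


blob = pack_vector([0.25, -1.5, 3.0])
print(len(blob))            # 12 bytes: three 32-bit floats
print(unpack_vector(blob))  # [0.25, -1.5, 3.0]
```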

For example, using the RecursiveCharacterTextSplitter on an FAQ document can easily chunk multiple questions together or split an answer in the middle – reducing the likelihood of a correct match.

For production usage, you can either fine-tune RecursiveCharacterTextSplitter by specifying which characters to split the text on (the default is ["\n\n", "\n", " ", ""]) or write your own text splitter.

Part 2: Writing The Chat Application
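As an illustration of a structure-aware splitter, here is a minimal sketch for the FAQ case. It assumes a hypothetical layout in which every question starts on its own line with the prefix `Q:`; the function name `split_faq` is ours and is not part of LangChain.

```python
import re


def split_faq(text):
    """Split an FAQ so that each chunk holds one question and its answer."""
    # Split right before every line that starts with "Q:" (assumed layout)
    parts = re.split(r"^(?=Q:)", text, flags=re.MULTILINE)
    return [part.strip() for part in parts if part.strip()]


faq = "Q: Is it free?\nA: Yes.\n\nQ: Is it open source?\nA: Yes, it is.\n"
for chunk in split_faq(faq):
    print(repr(chunk))
```

Because every chunk now contains exactly one question with its full answer, a matching vector is more likely to bring back a complete, relevant answer.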

The core of our chat application uses LangChain’s RetrievalQA, a simple chain that answers questions based on the provided context.

We initialize the RetrievalQA with two properties: the large language model we chose to use and the Redis vector store.

```python
import os

from langchain.vectorstores.redis import Redis
from langchain.embeddings import OpenAIEmbeddings
from langchain.chains import RetrievalQA
from langchain.chat_models import ChatOpenAI

os.environ['OPENAI_API_KEY'] = "YOUR_API_KEY"

embeddings = OpenAIEmbeddings()

# Connect to the index we created in the first part
rds = Redis.from_existing_index(
    embeddings, redis_url="redis://localhost:6379", index_name="chunk"
)

# Expose the vector store as a retriever
retriever = rds.as_retriever()

model = ChatOpenAI(
    temperature=0,
    model_name='gpt-3.5-turbo',
)

qa = RetrievalQA.from_llm(llm=model, retriever=retriever)
```

You might have noticed we load Redis in two steps: we first initialize Redis using the index we previously defined and then set it as a retriever.

LangChain’s retrievers are generic interfaces that return documents given an unstructured query.
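To show why a generic interface is useful, here is a toy sketch of the retriever idea. The `Retriever` protocol, the `KeywordRetriever` class and the `retrieve` method name are all hypothetical stand-ins and not LangChain classes; the point is only that the calling code does not care what storage sits behind the interface.

```python
from typing import List, Protocol


class Retriever(Protocol):
    """Anything that maps a free-text query to a list of documents."""

    def retrieve(self, query: str) -> List[str]: ...


class KeywordRetriever:
    """Toy retriever: ranks documents by how many query words they contain."""

    def __init__(self, documents: List[str], k: int = 2) -> None:
        self.documents = documents
        self.k = k

    def retrieve(self, query: str) -> List[str]:
        words = set(query.lower().split())
        scored = [(len(words & set(doc.lower().split())), doc) for doc in self.documents]
        matches = [item for item in scored if item[0] > 0]
        matches.sort(key=lambda item: item[0], reverse=True)
        return [doc for _, doc in matches[: self.k]]


def build_context(retriever: Retriever, question: str) -> str:
    """Works with any retriever, whatever storage sits behind it."""
    return "\n".join(retriever.retrieve(question))


docs = ["Jack went to school", "Nan stayed at home", "The school was closed"]
print(build_context(KeywordRetriever(docs), "where is the school"))
```

Swapping the keyword matcher for a vector store would not require any change to `build_context`, which is the benefit a shared retriever interface provides.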

All that’s left is to pass the user questions to the newly created chain and wait for OpenAI’s reply.

```python
print("Ask any question regarding The Four Corners in California book:")

# keep the bot running in a loop to simulate a conversation
while True:
    question = input()
    result = qa({"query": question})
    print(result["result"])
```

Finally, we wrap the question/answer process in an infinite loop to simulate a chat.

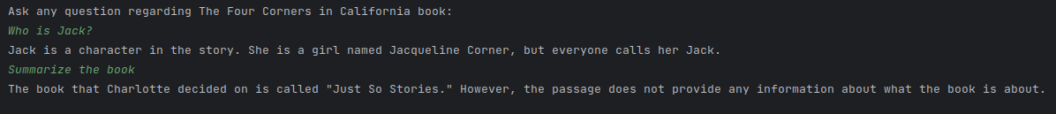

In the context of the book we provide in the demo, this solution can answer a wide array of questions, ranging from simple queries such as “Who is Jack” to more complex requests such as “Write a summary about Jack in 300 words.”

It’s worth noting that each LLM is limited in the context length it can accept, so only the top results are sent to OpenAI when we ask a question. As a result, broader questions such as “Summarize the book” will likely result in partial answers.
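The effect of a limited context can be sketched as a simple budget: we keep the best-ranked chunks until the budget runs out and drop the rest. This is only an illustration of the general idea and not how LangChain selects chunks. Real limits are measured in tokens, and the word count used here is a rough stand-in; the function name `fit_to_budget` is hypothetical.

```python
def fit_to_budget(ranked_chunks, max_words):
    """Keep the best-ranked chunks until the approximate word budget is used up."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        size = len(chunk.split())
        if used + size > max_words:
            break  # everything ranked lower never reaches the model
        selected.append(chunk)
        used += size
    return selected


ranked = ["first best chunk", "second chunk", "a much longer third chunk"]
print(fit_to_budget(ranked, max_words=5))  # ['first best chunk', 'second chunk']
```

A question such as “Summarize the book” would need nearly every chunk, which is why only a partial answer can be expected.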

One last capability needs to be added to our chat before it’s complete.

While the application acts like a conversation, it still lacks one important ingredient – memory. While the application can answer individual questions well, it does not understand the context of the conversation and will fail to understand references to previous questions or answers.

To solve that, we need to switch from using RetrievalQA, which provides a one-shot interaction, to ConversationalRetrievalChain, which also maintains a conversation log.

LangChain offers several memory management solutions. For our chat, the simplest solution would be to use the ConversationBufferMemory, which stores the conversation in memory.
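Conceptually, a buffer memory does little more than keep every turn of the conversation and replay it alongside the next question. The sketch below uses a hypothetical `BufferMemory` class to illustrate that idea; it is not LangChain’s implementation, and the answer text is a placeholder.

```python
class BufferMemory:
    """Hypothetical stand-in that keeps every turn of the conversation in a list."""

    def __init__(self):
        self.turns = []

    def add_turn(self, question, answer):
        self.turns.append((question, answer))

    def as_prompt_prefix(self):
        """Render the history so it can be prepended to the next question."""
        return "\n".join(f"Human: {q}\nAI: {a}" for q, a in self.turns)


memory = BufferMemory()
memory.add_turn("Who is Jack?", "<answer from the model>")

# The follow-up question only makes sense together with the stored history
print(memory.as_prompt_prefix() + "\nHuman: Write a summary about them in 300 words.")
```

In the tutorial’s code, ConversationBufferMemory plays this role for the chain.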

```python
from langchain.chains import ConversationalRetrievalChain
from langchain.memory import ConversationBufferMemory

# model and retriever are created exactly as in the previous example
memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

qa = ConversationalRetrievalChain.from_llm(llm=model, retriever=retriever, memory=memory)

print("Ask any question regarding The Four Corners in California book:")

# keep the bot running in a loop to simulate a conversation
while True:
    question = input()
    result = qa({"question": question})
    print(result["answer"])
```

There are two changes in the code that are worth noting.

Initializing the ConversationalRetrievalChain works quite similarly to RetrievalQA. We need to initialize the preferred LangChain memory first and pass it as one of the parameters.

Sadly, one other change often surprises developers. While the execution process of both methods is similar, the returned object has different keys: the chain now expects the input under "question" rather than "query", and we must use result["answer"] instead of result["result"] to access the response.
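If you switch between the two chains while experimenting, a small helper can hide the difference in output keys. This is a minimal sketch that relies only on the key names shown in the examples above; the function name `extract_answer` is hypothetical.

```python
def extract_answer(result):
    """Return the reply text from either chain's output dictionary."""
    for key in ("answer", "result"):
        if key in result:
            return result[key]
    raise KeyError("no 'answer' or 'result' key in chain output")


# Dictionaries shaped like the outputs used in the two examples
print(extract_answer({"query": "Who is Jack", "result": "reply from RetrievalQA"}))
print(extract_answer({"question": "Who is Jack", "answer": "reply from the conversational chain"}))
```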

To Summarize

LangChain enables us to quickly develop a chatbot that answers questions based on a custom data set, similar to many paid services that have been popping up recently.

At the same time, LangChain is a relatively new library in constant flux. Its APIs are still changing, and care should be taken before using it in production.

The complete code for this tutorial is available at https://github.com/gnesher/mygpt.