When we decided to open source Semaphore, we knew it wouldn’t be just about flipping a switch and making the repo public. A lot had to change, especially how we test and manage contributions.

Our CI/CD pipeline had been fine-tuned over the years to work with our internal infrastructure. But if we wanted to support real-world use cases—developers deploying Semaphore on Google Cloud, AWS, or even their own machines—we needed something new.

We needed to validate that every release of Semaphore would run smoothly anywhere. That meant spinning up fresh infrastructure, installing the app, running end-to-end tests, and tearing it all down.

So we built ephemeral environments. This is how we did it—and how it ended up being a lot harder than I thought.

The Goal: From Prod-Centric to Portable

Our infrastructure had grown organically over a decade. Everything—our app config, testing setup, CI logic—was tailored to our exact environment.

Once we went open source, that was no longer acceptable. We had to prove that Semaphore could run cleanly in new environments, without the crutches of our internal setup.

So we set out to change the pipeline.

Here’s what the goal looked like:

- Package the app using Helm charts.

- Spin up a brand-new Kubernetes cluster.

- Install Semaphore using those charts.

- Run end-to-end tests.

- Tear it all down when we’re done.
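The five steps above form a setup/teardown pair: whatever happens in the middle, the last step has to run, or a failed test leaves a cluster running. Below is a minimal Python sketch of that contract. It assumes a hypothetical `run_step` callback that executes one named step, for example by shelling out to Terraform or Helm. The step names are illustrative and are not taken from Semaphore's pipeline.

```python
from typing import Callable, Sequence

# Hypothetical step names; in a real pipeline each would map to a command.
SETUP_STEPS = ("package", "provision", "install", "test")
TEARDOWN_STEP = "destroy"


def run_ephemeral(run_step: Callable[[str], None],
                  steps: Sequence[str] = SETUP_STEPS,
                  teardown: str = TEARDOWN_STEP) -> list[str]:
    """Run setup steps in order and always run teardown, even on failure.

    Returns the steps that completed. If a step raises, teardown still runs
    and the original exception propagates to the caller.
    """
    completed: list[str] = []
    try:
        for step in steps:
            run_step(step)
            completed.append(step)
    finally:
        # Runs on success and on failure, so no environment is leaked.
        run_step(teardown)
    return completed
```

In the pipeline described in this post, each step is a separate CI job. There, the "always tear down" guarantee would come from the CI system, such as a cleanup job that runs even when earlier jobs fail, rather than from a Python `finally`. The sketch only makes the contract explicit.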

Seems reasonable, right?

First Stop: Google Cloud

Since our production runs on Google Cloud, starting with GKE made sense. We already used Terraform for our infrastructure, so we figured we could just repurpose what we had. But it turns out that wouldn’t work at all.

The problem wasn’t Terraform itself. Our production config had 270+ resources, including a lot of networking and security setup we didn’t need for the Community Edition. For testing, that was overkill.

So we started from scratch. The minimal version included:

- A VPC (with default subnets)

- A Kubernetes cluster

- A node pool

- A resource for storing TLS certificates

- A static IP

Just five resources. That was a huge win.
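For illustration, here is a rough Python sketch that builds a five-resource config using Terraform's JSON syntax, which Terraform reads from a `main.tf.json` file. The post does not say which resource types were used, so these are assumptions. Google provider resources stand in for each bullet, including `google_compute_ssl_certificate` for "a resource for storing TLS certificates". All names, the machine type, and the certificate paths are hypothetical.

```python
import json


def minimal_gke_config(project: str, location: str,
                       name: str = "semaphore-e2e") -> dict:
    """Build a hypothetical five-resource GKE config in Terraform JSON syntax."""
    return {
        "provider": {"google": {"project": project, "region": location}},
        "resource": {
            # Auto-mode VPC: GCP creates the default subnets for us.
            "google_compute_network": {
                "vpc": {"name": name, "auto_create_subnetworks": True}
            },
            "google_container_cluster": {
                "cluster": {
                    "name": name,
                    "location": location,
                    "network": "${google_compute_network.vpc.name}",
                    # Nodes come from the separate node pool below.
                    "remove_default_node_pool": True,
                    "initial_node_count": 1,
                }
            },
            "google_container_node_pool": {
                "nodes": {
                    "name": f"{name}-pool",
                    "cluster": "${google_container_cluster.cluster.name}",
                    "location": location,
                    "node_count": 1,
                    "node_config": {"machine_type": "e2-standard-4"},  # assumed size
                }
            },
            # Assumed: certs generated earlier in the pipeline, read from disk.
            "google_compute_ssl_certificate": {
                "tls": {
                    "name": f"{name}-tls",
                    "private_key": '${file("certs/tls.key")}',
                    "certificate": '${file("certs/tls.crt")}',
                }
            },
            # Reserved global IP that DNS can point at before the LB exists.
            "google_compute_global_address": {"ip": {"name": f"{name}-ip"}},
        },
    }


def render(config: dict) -> str:
    """Serialize the config; write this to main.tf.json for `terraform plan`."""
    return json.dumps(config, indent=2)
```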

We hooked up a Helm chart to install Semaphore, passed in a domain name, and used a Google Load Balancer to expose it.

Then I hit my first real snag.

The Load Balancer Problem

Semaphore’s installer verifies the installation by calling itself through the public domain. But when installation starts, the load balancer doesn’t exist yet, so the domain has nothing to resolve to, and the installation fails.

The DNS needs to point to something. The solution was to reserve a static IP during provisioning, point the domain to that IP in DNS, and then make sure the load balancer uses that reserved IP once it spins up.

It worked. Not elegant, but functional.
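The fix is really about ordering: the domain must already resolve to the reserved IP before the installer's self-check runs. A small pre-install guard can make that ordering explicit. This Python sketch is not part of Semaphore's installer. The function name, domain, IP, and timeouts are hypothetical, and the resolver and sleep are injectable so the logic can be tested without real DNS.

```python
import socket
import time
from typing import Callable


def wait_for_dns(domain: str, expected_ip: str,
                 resolve: Callable[[str], str] = socket.gethostbyname,
                 timeout: float = 300.0, interval: float = 10.0,
                 sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll until `domain` resolves to the reserved static IP, or time out."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            if resolve(domain) == expected_ip:
                return True
        except OSError:
            # socket.gaierror (e.g. NXDOMAIN) subclasses OSError: keep waiting.
            pass
        if time.monotonic() >= deadline:
            return False
        sleep(interval)
```

Such a check would sit between the Terraform step, which knows the reserved IP, and `helm install`. If it returns `False`, the job can fail fast instead of waiting for the installer's own check to time out.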

From Manual to CI

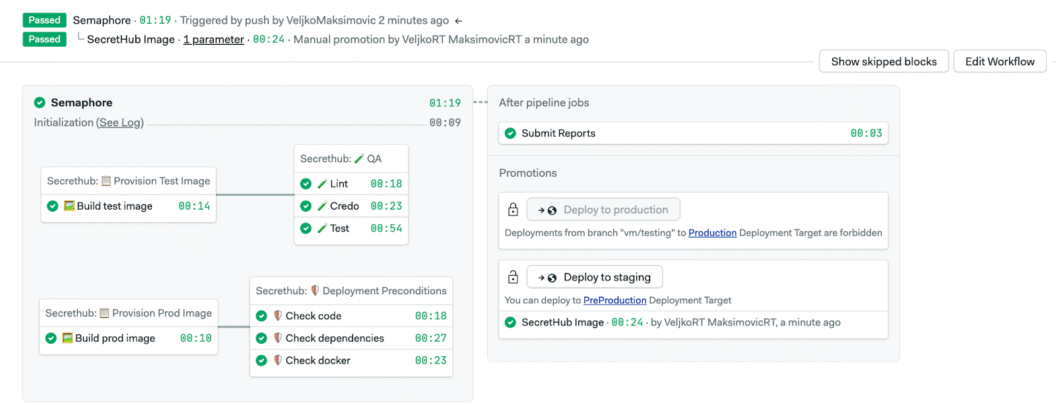

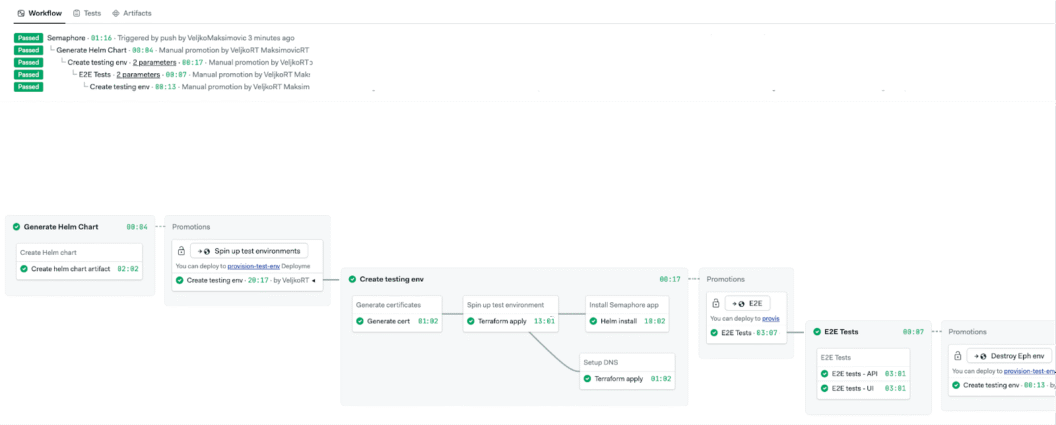

Once we had the GCP-based ephemeral environment working manually, we turned it into a CI workflow:

- Generate certs

- Provision infrastructure with Terraform

- Install Semaphore with Helm

- Run end-to-end tests

- Destroy everything

Each step runs in its own CI job, and we pass data between jobs using artifacts. The flow was finally shaping up.
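As an example of the data that moves between jobs, the provisioning job could save `terraform output -json` as an artifact (in Semaphore CI, for instance, with `artifact push workflow` / `artifact pull workflow`). The install job would then turn it into Helm flags. Below is a Python sketch of the second half. The output names and Helm value keys are hypothetical and not taken from Semaphore's chart. The input format is the one `terraform output -json` produces: each output name maps to an object with a `value` field.

```python
import json


def read_tf_outputs(raw: str) -> dict:
    """Flatten `terraform output -json` into a plain {name: value} dict."""
    return {name: out["value"] for name, out in json.loads(raw).items()}


def helm_set_args(outputs: dict, mapping: dict) -> list[str]:
    """Turn selected outputs into `--set key=value` flags for `helm install`.

    `mapping` goes from Terraform output name to Helm value key.
    """
    args: list[str] = []
    for output_name, helm_key in mapping.items():
        args += ["--set", f"{helm_key}={outputs[output_name]}"]
    return args
```

With this approach, only the small JSON file moves between jobs. Each job stays stateless and can be re-run on its own.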

Time to try the same on AWS.

Then Came EKS

We assumed this would be a “copy/paste and rename” kind of situation. But, yet again, we found a lot of unforeseen problems.

Here’s what broke immediately:

- Persistent volumes didn’t work. Unlike GCP, EKS doesn’t come with default storage classes.

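- Pods didn’t schedule. It turns out that with the default Amazon VPC CNI, every pod gets its own VPC IP address, so the number of pods per node is capped by how many ENIs (elastic network interfaces), and IPs per ENI, the instance type supports. We had to spread the workload across multiple nodes.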

- Load balancers didn’t auto-provision. GKE handles this for you. EKS doesn’t—unless you install a separate controller.

- No static IPs to reserve up front. An AWS load balancer is addressed by a DNS name, and you only get that name after the LB is created.
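The pod limit is easy to estimate ahead of time. Under the Amazon VPC CNI's default settings (no prefix delegation), AWS computes max pods per node as ENIs × (IPv4 addresses per ENI − 1) + 2. The sketch below applies that formula. The 60-pod workload in the usage example is a made-up number, not Semaphore's actual footprint.

```python
import math


def max_pods(enis: int, ips_per_eni: int) -> int:
    """Default VPC CNI limit: one IP per ENI is the ENI's own primary address;
    +2 accounts for host-network pods such as aws-node and kube-proxy."""
    return enis * (ips_per_eni - 1) + 2


def nodes_needed(total_pods: int, enis: int, ips_per_eni: int) -> int:
    """Rough node count for a workload. Simplification: ignores DaemonSet
    pods, which take a slot on every node."""
    return math.ceil(total_pods / max_pods(enis, ips_per_eni))
```

For example, a t3.medium (3 ENIs, 6 IPv4 addresses each) tops out at 17 pods, so a hypothetical 60-pod install would need at least 4 such nodes. An m5.large (3 ENIs, 10 addresses each) allows 29.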

So we added:

- AWS Load Balancer Controller, to actually create the load balancer from our Ingress definitions

- ExternalDNS, to update our DNS zone automatically once the load balancer is ready
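The two controllers coordinate through the Ingress object itself. The AWS Load Balancer Controller reads `alb.ingress.kubernetes.io/*` annotations to create an ALB. ExternalDNS reads the Ingress host, plus its own hostname annotation, and points the DNS record at the ALB's name once that name exists. Semaphore's Helm chart defines its own Ingress, so this Python sketch is a standalone illustration, not the chart's template. The Ingress name, host, and backend service are hypothetical, and it assumes an IngressClass named `alb`, the controller's usual default.

```python
import json


def alb_ingress(name: str, host: str, service: str, port: int) -> dict:
    """Ingress that the AWS LB Controller turns into an ALB and that
    ExternalDNS turns into a DNS record for `host`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # Read by the AWS Load Balancer Controller.
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "ip",
                # Read by ExternalDNS (it also uses the hosts in spec.rules).
                "external-dns.alpha.kubernetes.io/hostname": host,
            },
        },
        "spec": {
            "ingressClassName": "alb",  # assumed IngressClass name
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests: python ingress.py | kubectl apply -f -
    print(json.dumps(alb_ingress("semaphore", "semaphore.example.com",
                                 "semaphore-web", 80), indent=2))
```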

The extra complexity was painful, but it finally mirrored the GCP setup.

Meanwhile, Someone Just Used a VM

While we were deep in Kubernetes land, a teammate spun up a minimal environment using a single VM and k3s.

It booted in 45 seconds. No load balancers. No controllers. No YAML debugging. It just ran.

We now use this approach for fast, local testing during feature development. When we need to validate against “real” infrastructure, we run the GCP and AWS ephemeral environments before a release.

What We Learned (the Hard Way)

Here’s what we’d tell our past selves before starting the project:

- Cloud services aren’t interchangeable. GCP and AWS might both offer “managed Kubernetes,” but the assumptions, defaults, and gotchas are wildly different.

- Strip it down. Start with the simplest working Terraform you can. Add features later.

- Expect weird infrastructure bugs. Load balancer DNS, pod scheduling limits, and missing storage classes—these took the most time to figure out.

- Use automation to your advantage. CI pipelines, Terraform, Helm—once wired together, they save hours of repeat work.

Where We Are Now

Today, every version of Semaphore goes through:

- Lightweight CI validation in a single-VM k3s setup

- Full end-to-end tests in GCP and AWS-based ephemeral environments

And everything is triggered automatically in CI.
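The split between fast and full checks can be expressed as a small selection rule. This Python sketch assumes, hypothetically, that releases are identified by `v*` tags and that the three environments carry the names below. Semaphore's actual trigger rules may differ.

```python
def environments_for(git_ref: str) -> list[str]:
    """Pick test environments for a git ref (hypothetical naming scheme).

    Every change gets the fast single-VM k3s run; release tags also get
    the full GKE and EKS ephemeral environments.
    """
    envs = ["k3s-vm"]
    if git_ref.startswith("refs/tags/v"):
        envs += ["gke", "eks"]
    return envs
```

Keeping the cloud runs limited to releases keeps day-to-day feedback fast, while every shipped version is still exercised on real GCP and AWS infrastructure.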

What used to be a manual process tied to our infrastructure is now a portable, repeatable, and testable flow.

It wasn’t the easiest migration, but it was one of the most important things we’ve done for open-sourcing Semaphore.

If you’re working on something similar—or thinking about building ephemeral environments—feel free to drop by our Discord server or check out the repository. We’re always happy to chat.