Introduction

In this tutorial, we will learn how to run a Java Play application as a Docker container.

Docker is a software containerization platform. It uses containers instead of hypervisors for a number of reasons. First of all, containers are lightweight and have a lower memory footprint. Secondly, containers virtualize the operating system while hypervisors virtualize the hardware, so, in addition to being lightweight, containers are also more efficient. Finally, containers share the host’s kernel, whereas a hypervisor runs a complete guest operating system with its own kernel for every virtual machine.

First, we will create what is called an image of the application we want to deploy, and every time we run this image, we will essentially be creating a Docker container. We can create as many containers of the same image as we want.

With Docker, we can mirror the production environment on any host operating system. This is how Docker achieves its much-needed portability.

What we will do first is create a working Java Play web application, install Docker, set up Docker for use, and finally create a runtime environment within it with our own specifications.

Then, we will build an image of the application and run it in a container.

At the end of this tutorial, you will have a basic understanding of Docker and running a Java Play application as a Docker container.

Why Docker?

In this section, we will explore reasons why it is in our best interest as developers to understand and use Docker. Adding Docker to a project lifecycle is like working through an exam paper prior to the official exam day. By the time you sit for the exam, you have all the solutions worked out, and you don’t even have to think through them any more.

Docker creates isolated environments in the form of containers that are consistent with the production environment, which eliminates unforeseen deployment issues.

This way, applications have greater portability during development. It’s as though containerized applications move with their environment setup wherever they are deployed.

You could argue that traditional virtual machines offer the same capability, and that several other tools, such as Puppet, already do this. However, Docker stands out in resource efficiency.

Every container created by Docker shares the host machine’s kernel and uses its resources directly. This makes Docker containers lightweight: a container typically starts within a second or so, since it doesn’t have to boot its own operating system. Virtual machines, on the other hand, need to boot a full OS when you start them, which can take minutes.

Prerequisites

To be able to successfully follow this tutorial, you need to ensure the following setup exists on your system:

- Play framework 2.5.6. Make sure to download the 1 MB minimal distribution, which ships with Typesafe Activator 1.3.10. This works for Windows, Linux and Mac. Add the bin directory of the unzipped Activator folder (the one containing activator.bat) to your PATH variable in the Windows Control Panel.

- Docker installed on your machine. This tutorial uses Docker Toolbox on Windows 7/8, following the official installation instructions for that platform. The installer creates a Docker Quickstart Terminal on the desktop. If you are using Windows 10, you can install Docker natively instead, in which case the docker commands will be available from any terminal.

- Bash (only for Windows 7/8). The Docker Quickstart Terminal runs in bash, so you need to have bash.exe available on your system. If you have Git or Cygwin already installed, you can skip this step; you will just point Docker to the existing bash.exe location.

- Java installed on your machine. You need to have a Java runtime on your system to be able to run a Play application.

Creating and Running a Java Play Application

By the end of this section, we will have a running Java Play application ready to deploy in a Docker container.

We will use Typesafe Activator for this purpose. Activator is a build tool built on top of the Scala Build Tool (SBT). SBT is the standard tool for building Scala applications, just as Maven is for Java applications. Since Play is written in Scala, we use SBT to build Play applications, and Activator does everything SBT does and more.

First of all, Activator has a library of templates for the projects it supports, so that you don’t have to create one from scratch. Secondly, it has a Web UI if you’re not comfortable with the CLI. You can create, run, test, and monitor your applications from the Web UI as well.

Note that we downloaded the minimal Activator distribution, so the first time we run a command, Activator downloads all the dependencies it needs to fulfill it. The next time we run the same command, there will be no further downloads, as all the dependencies will already be available on our system. So, let’s open a Windows command prompt and create a new Java Play application:

activator new docker-play play-java

The new command tells Activator to create a fresh application in a new directory under the current one. The name of the new application is docker-play, and play-java is the template Activator uses to create it.

Activator has several templates for different application types.

Upon completion, the directory structure of docker-play should look like this:

+---docker-play
| +---app
| | +---controllers
| | +---views
| +---conf
| +---logs
| +---project
| | +---project
| | +---target
| |
| +---public
| | +---images
| | +---javascripts
| | +---stylesheets
| +---target
| |
| +---test

Let’s move into the new project directory and run the application by executing the following commands:

cd docker-play

activator run

We should now see the following output:

--- (Running the application, auto-reloading is enabled) ---
[info] p.c.s.NettyServer - Listening for HTTP on /0:0:0:0:0:0:0:0:9000
(Server started, use Ctrl+D to stop and go back to the console...)

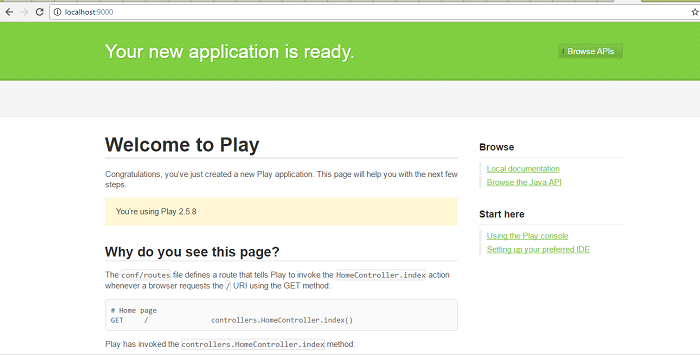

We can now load http://localhost:9000 in the browser and see the default page of the new application.

We have successfully created and run a Java Play application, and are now ready to dockerize it. We can stop it by typing CTRL+D at the command prompt, so that port number 9000 is free for the next steps.

Setting Up Docker

Starting the Terminal

We can start Docker by launching the quick start terminal, which should be available on our desktop after installation.

If the terminal does not automatically find the bash executable (for example, one installed with babun), you may have to browse to the bash.exe location when prompted the first time you run it.

To test if everything is working fine, run docker --version from the prompt, and you should get an output like this:

Docker version 1.12.0, build 8eab29e

Creating and Configuring a Virtual Host

With Docker Toolbox, which we are using on Windows 7/8, Docker runs through Docker Machine.

Docker Engine relies on Linux kernel features, so Windows and Mac computers have to use a virtualization layer (e.g., VirtualBox) to run it. Docker Machine makes this possible by provisioning a local virtual machine with Docker Engine, connecting to it, and letting you run Docker commands against it.

With Docker Machine, we can create any number of virtual hosts we need. Each host is allocated its own virtual resources, such as memory and hard disk space.

A virtual host should ideally be created to mirror the resources available on the production server. This is because our container will run from inside this host. Let’s create one for the new application:

docker-machine create --driver virtualbox \
  --virtualbox-disk-size "5000" --virtualbox-cpu-count 2 \
  --virtualbox-memory "4096" development

We have created a host called “development”, with a 5000 MB (about 5 GB) disk, 2 CPUs, and 4096 MB (4 GB) of memory.

Later, when we access our containerized application from the browser, we will need the IP address of this host, so let’s retrieve it now and take note of it:

docker-machine ip development

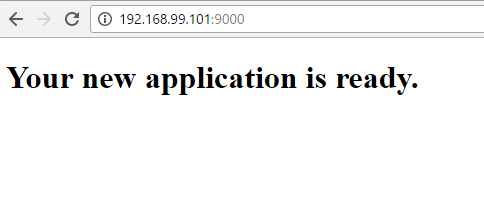

In our case, the IP address is 192.168.99.101. To complete the setup, we need to add some environment variables to the terminal session so that the docker command can connect to the host we have just created.

Docker Machine provides a simple way to generate the environment variables and add them to the session:

docker-machine env development

Then, we run the command shown at the end of its output:

eval $("C:\Program Files\Docker Toolbox\docker-machine.exe" env development)

By default, Docker Machine sets up a virtual host for us. This is where our containers will run if we don’t create a virtual host with our own attributes. Since we’ve created one in the previous step, we can use Docker Machine to SSH into it instead of using the default virtual host:

docker-machine ssh development

Shared File System

Because Docker runs inside an additional virtualization layer, the virtual host cannot see the whole file system of the host operating system, which is Windows in our case. Docker Machine automatically shares part of it, the C:/Users directory. Therefore, we are able to use C:/Users/currentuser inside the virtual host and our containers, and any files or directories in that location are available to us.

This means that we need to move our project, or create a new one, at this location. The end result should be a Java Play project at C:/Users/currentuser/docker-play.

To access this project from the Docker console, we cd to it as follows:

cd /c/Users/currentuser/docker-play

Notice how the Windows path C:/Users/currentuser/docker-play is mapped onto the equivalent Unix-style path /c/Users/currentuser/docker-play.
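To make the mapping concrete, here is a minimal Java sketch of the conversion, assuming the Docker Toolbox convention shown above: the drive letter becomes a lower-case top-level directory and backslashes become forward slashes. The class name ToolboxPaths is hypothetical, and the result is only usable for directories Docker Machine actually shares, such as those under C:/Users.

```java
// Hypothetical helper (not part of Docker Toolbox): converts a Windows path
// such as C:\Users\currentuser\docker-play into the Unix-style path used
// inside the Docker Toolbox virtual host, /c/Users/currentuser/docker-play.
final class ToolboxPaths {
    private ToolboxPaths() {}

    static String toToolboxPath(String windowsPath) {
        // Accept both backslashes and forward slashes as separators.
        String p = windowsPath.replace('\\', '/');
        if (p.length() < 2 || p.charAt(1) != ':' || !Character.isLetter(p.charAt(0))) {
            throw new IllegalArgumentException("Not a drive-letter path: " + windowsPath);
        }
        // The drive letter becomes a lower-case top-level directory: C: -> /c
        String drive = "/" + Character.toLowerCase(p.charAt(0));
        String rest = p.substring(2);
        if (rest.isEmpty()) {
            return drive;
        }
        if (!rest.startsWith("/")) {
            // "C:Users" is relative to the drive's current directory; not supported here.
            throw new IllegalArgumentException("Drive-relative path: " + windowsPath);
        }
        return drive + rest;
    }

    public static void main(String[] args) {
        String input = args.length > 0 ? args[0] : "C:/Users/currentuser/docker-play";
        System.out.println(toToolboxPath(input));
    }
}
```

For example, passing C:\Users\currentuser\docker-play also yields /c/Users/currentuser/docker-play, since both separator styles are normalized first.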

Creating a Dockerfile

We are now ready to build an image of our application to make it portable across different runtime environments. Assembling an image involves running a series of commands from the machine’s command line in succession.

Typing these commands one by one would be tedious and error-prone. Instead, we can write all the necessary commands for building an image in a text file called a Dockerfile.

Later, we can create an automated build by running docker build, and all these commands will be executed, resulting in an image.

The Dockerfile syntax is described in the official Dockerfile reference.

Since we are running a Java application, we need to set up a JDK in the container; we will use JDK 8. A Dockerfile must begin with a FROM instruction, which tells Docker which base image we are using.

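So, let’s say we want to create a Docker image based on Ubuntu with JDK 8 installed:

FROM ubuntu:16.04

RUN apt-get update && apt-get install -y software-properties-common wget unzip && \
    add-apt-repository -y ppa:webupd8team/java && apt-get update

RUN echo oracle-java8-installer shared/accepted-oracle-license-v1-1 select true | /usr/bin/debconf-set-selections

RUN apt-get install -y oracle-java8-installer

First, we get the Ubuntu 16.04 image using FROM. To execute shell commands while the image is being built, we use RUN. The oracle-java8-installer package is not in Ubuntu’s default repositories, so the first RUN installs the tools needed to add the WebUpd8 PPA that provides it (plus wget and unzip, which we will need later), adds the PPA, and refreshes the package lists. We don’t use sudo: Dockerfile commands run as root by default, and the base image does not include sudo.

The Java installer requires us to accept a license at the beginning of the installation process. The second RUN line automates this for us.

Finally, we start the installation. The -y flag runs the installation in non-interactive mode, answering yes to every question.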

With the steps above, we created a custom image. Alternatively, we could have used an official, community-maintained image. These images usually follow strict guidelines and are maintained by people closely associated with the software inside them. However, it’s good to develop the habit of making custom images, because sooner or later you will want to tailor the environment to your specific needs.

The most common community image for the JDK is openjdk, so we could have replaced the above set of commands with a single one:

FROM openjdk:8

Next, we will define an environment variable in the image to reference a path we will use throughout the build:

ENV PROJECT_HOME /usr/src

We installed Activator on the host machine, but remember that the image has to bundle everything it needs at build time. Therefore, we need another copy of Activator inside the image. We will create a directory to house it; for simplicity’s sake, we’ll call it activator.

In addition to this, we need a directory to house our project context inside the container. We’ll call it app. Let’s go ahead and create these two directories:

RUN mkdir -p $PROJECT_HOME/activator $PROJECT_HOME/app

We now need to download activator for the build. Before that, we will switch the working directory to activator:

WORKDIR $PROJECT_HOME/activator

RUN wget http://downloads.typesafe.com/typesafe-activator/1.3.10/typesafe-activator-1.3.10.zip && \
    unzip typesafe-activator-1.3.10.zip

With the above commands, we download Activator and unzip it inside the $PROJECT_HOME/activator directory. We then need to make the Activator binaries available in the container by adding them to $PATH:

ENV PATH $PROJECT_HOME/activator/activator-dist-1.3.10/bin:$PATH

Later on, we will see how to run the project in production mode without building a full distribution, using Play’s activator clean stage command. It cleans the project, compiles it, and places the application together with all required dependencies under target/universal/stage in the project directory, which inside our container is $PROJECT_HOME/app/target/universal/stage.

It also adds a bin folder containing a startup script with the same name as the project, in our case docker-play.

Therefore, we add the directory containing this script to the PATH variable:

ENV PATH $PROJECT_HOME/app/target/universal/stage/bin:$PATH
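Assuming the default start script name, which is derived from the project name, the script location inside the project can be computed as in this sketch. StageScript is a hypothetical helper; it only builds the path and does not check that the file exists.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: computes where "activator stage" puts the start script,
// assuming the default script name (derived from the project name).
final class StageScript {
    private StageScript() {}

    static Path startScript(Path projectRoot, String projectName, boolean windows) {
        // Unix-like systems get a plain script; Windows gets a .bat file next to it.
        String fileName = windows ? projectName + ".bat" : projectName;
        return projectRoot.resolve(Paths.get("target", "universal", "stage", "bin", fileName));
    }

    public static void main(String[] args) {
        // Inside our image, the project root is $PROJECT_HOME/app, i.e. /usr/src/app.
        System.out.println(startScript(Paths.get("/usr/src/app"), "docker-play", false));
    }
}
```

On a Linux machine, the main method prints /usr/src/app/target/universal/stage/bin/docker-play, the directory part of which is what the ENV PATH line above adds.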

Next, we will copy all the project files to their new home in the container, app:

COPY . $PROJECT_HOME/app

We’ll now switch the working directory to app, so that when we execute activator run in the container, we are already at the project root:

WORKDIR $PROJECT_HOME/app

Finally, we expose port 9000 of the container, which our Play application listens on. Note that EXPOSE only documents the port; we still need to publish it to the host with the -p flag of docker run when we start the container:

EXPOSE 9000

The final Dockerfile looks as follows:

FROM ubuntu:16.04

RUN apt-get update && apt-get install -y software-properties-common wget unzip && \
    add-apt-repository -y ppa:webupd8team/java && apt-get update

RUN echo oracle-java8-installer shared/accepted-oracle-license-v1-1 select true | /usr/bin/debconf-set-selections

RUN apt-get install -y oracle-java8-installer

ENV PROJECT_HOME /usr/src

RUN mkdir -p $PROJECT_HOME/activator $PROJECT_HOME/app

WORKDIR $PROJECT_HOME/activator

RUN wget http://downloads.typesafe.com/typesafe-activator/1.3.10/typesafe-activator-1.3.10.zip && \
    unzip typesafe-activator-1.3.10.zip

ENV PATH $PROJECT_HOME/activator/activator-dist-1.3.10/bin:$PATH

ENV PATH $PROJECT_HOME/app/target/universal/stage/bin:$PATH

COPY . $PROJECT_HOME/app

WORKDIR $PROJECT_HOME/app

EXPOSE 9000

Building Project Image

Since we are done with creating our Dockerfile, we are now ready to build an image of the Play application from it. This image will be a self-contained snapshot of our application with all its files, environment settings, and dependencies.

The image can actually be moved around as is from one system to another, or shared with the rest of the world. Let’s run the following command from the project root, where the Dockerfile is located:

docker build -t "egima/docker-play" .

The -t flag tags the image; ours is tagged "egima/docker-play". Do not forget the period at the end, which sets the build context to the current directory. The context is the directory where the Dockerfile is located, along with all the project resources; it is also what COPY . copies into the image.
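Image names given to -t follow the form name[:tag], and Docker uses the tag latest when none is given, so egima/docker-play is stored as egima/docker-play:latest. This simplified Java sketch (ImageRef is a hypothetical class) shows the split; it ignores digests such as @sha256:... and treats a colon before the last slash as part of a registry host:port.

```java
// Hypothetical, simplified parser for image references of the form name[:tag].
// Digests (name@sha256:...) are not handled.
final class ImageRef {
    final String repository;
    final String tag;

    private ImageRef(String repository, String tag) {
        this.repository = repository;
        this.tag = tag;
    }

    static ImageRef parse(String reference) {
        int slash = reference.lastIndexOf('/');
        int colon = reference.lastIndexOf(':');
        // A colon after the last slash separates the tag; a colon before it
        // belongs to a registry address such as localhost:5000.
        if (colon > slash) {
            return new ImageRef(reference.substring(0, colon), reference.substring(colon + 1));
        }
        // Docker falls back to the "latest" tag when none is given.
        return new ImageRef(reference, "latest");
    }

    @Override
    public String toString() {
        return repository + ":" + tag;
    }

    public static void main(String[] args) {
        System.out.println(parse("egima/docker-play")); // egima/docker-play:latest
    }
}
```

The same rule explains FROM openjdk:8 earlier: openjdk is the repository and 8 is the tag.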

When the above command finishes, we should see Successfully built idxxxxx at the end of the console output, where idxxxxx is the ID of the built image. Then we run the following command:

docker images

Our new image, egima/docker-play, should be listed along with the others.

Running Project Container

We are now ready to run this image in a container. An image in Docker can be compared to a class in Java, and a container can be compared to an object of the class.

The image defines all the project dependencies, environment settings, and resources, while a container is a single instance of the image. Therefore, we are able to run several containers of the same image.
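Since the analogy is itself about Java, it can be sketched directly in Java. DockerImage and RunningContainer below are hypothetical classes that only model the relationship; they do not talk to Docker. Each call to run creates a distinct instance with its own ID, while all instances share the same image definition.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical classes for illustration only; they are not a Docker API.
// DockerImage plays the role of a Java class: a fixed definition of
// environment settings and an exposed port.
final class DockerImage {
    final String name;
    final Map<String, String> env;
    final int exposedPort;

    DockerImage(String name, Map<String, String> env, int exposedPort) {
        this.name = name;
        // Images do not change once built, so keep an unmodifiable copy.
        this.env = Collections.unmodifiableMap(new HashMap<>(env));
        this.exposedPort = exposedPort;
    }

    // Like "new" on a class: every run creates a separate container instance.
    RunningContainer run(String command) {
        return new RunningContainer(this, command);
    }

    public static void main(String[] args) {
        DockerImage image = new DockerImage("egima/docker-play",
                Collections.singletonMap("PROJECT_HOME", "/usr/src"), 9000);
        RunningContainer first = image.run("activator run");
        RunningContainer second = image.run("activator run");
        System.out.println(first.id + " and " + second.id + " both come from " + image.name);
    }
}

// RunningContainer plays the role of an object: one instance of the image
// with its own identity.
final class RunningContainer {
    private static final AtomicInteger NEXT_ID = new AtomicInteger(1);

    final int id;
    final DockerImage image;
    final String command;

    RunningContainer(DockerImage image, String command) {
        this.id = NEXT_ID.getAndIncrement();
        this.image = image;
        this.command = command;
    }
}
```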

Run in Development Mode

Let’s run a container of our Play application image with the following command:

docker run -i -p 9000:9000 egima/docker-play activator run

The -i flag keeps the container’s standard input attached to our terminal. Because we don’t pass the -d flag, the container runs in the foreground rather than as a background daemon, so we can observe the logs in case a problem arises. The -p flag publishes a container port on the host.

With the 9000:9000 option, we map port 9000 on the host (here, the development virtual host) to port 9000 in the container; the format is hostPort:containerPort. Port 9000 on the host must not already be in use; otherwise, we will get an error.
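If you want to check whether a port is free before starting a container, one option is to try binding it, as in this minimal Java sketch (PortCheck is a hypothetical helper). Keep in mind that it checks the machine it runs on: with Docker Toolbox the port is published on the development virtual host, so this is mainly useful for confirming that nothing on your own machine, such as an activator run you forgot to stop, still holds port 9000, or for setups where Docker runs natively.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;

// Hypothetical helper: reports whether a TCP port can be bound right now.
final class PortCheck {
    private PortCheck() {}

    // Tries to bind the port on all local interfaces; failure means another
    // process (for example, a running Play server) already holds it.
    static boolean isPortFree(int port) {
        try (ServerSocket socket = new ServerSocket()) {
            socket.bind(new InetSocketAddress(port));
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        int port = args.length > 0 ? Integer.parseInt(args[0]) : 9000;
        System.out.println("Port " + port + (isPortFree(port) ? " is free" : " is in use"));
    }
}
```

The socket is closed again by try-with-resources, so the check itself does not keep the port occupied.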

We follow this with the name of the image from which we create the container. The last part of the command is the command we would like to execute inside the container, which in our case is activator run.
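The structure of the command (options, then the image name, then the command for the container) can be made explicit by assembling the arguments as a list, as in this sketch. DockerRunArgs and dockerRun are hypothetical names; the resulting list could be handed to java.lang.ProcessBuilder to launch docker, which is left as a comment here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: builds "docker run <options> <image> <command...>".
final class DockerRunArgs {
    private DockerRunArgs() {}

    static List<String> dockerRun(List<String> options, String image, List<String> command) {
        List<String> args = new ArrayList<>();
        args.add("docker");
        args.add("run");
        args.addAll(options);   // flags such as -i and -p must come before the image
        args.add(image);
        args.addAll(command);   // everything after the image runs inside the container
        return args;
    }

    public static void main(String[] args) {
        List<String> cmd = dockerRun(
                Arrays.asList("-i", "-p", "9000:9000"),
                "egima/docker-play",
                Arrays.asList("activator", "run"));
        // Prints: docker run -i -p 9000:9000 egima/docker-play activator run
        System.out.println(String.join(" ", cmd));
        // new ProcessBuilder(cmd).inheritIO().start() would launch it (docker must be on PATH).
    }
}
```

Keeping each argument as a separate list element mirrors how the shell splits a command line; for example, a system property written with spaces around the = sign would turn into three separate arguments instead of one.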

At this point, our command for running a container from the Play application image is ready to be executed. After execution, the console stays attached to the running container. The output should be the same as the output we get when we run the Play application directly on the host machine:

--- (Running the application, auto-reloading is enabled) ---
[info] p.c.s.NettyServer - Listening for HTTP on /0:0:0:0:0:0:0:0:9000
(Server started, use Ctrl+D to stop and go back to the console...)

We are now ready to load the Play application in the browser at http://192.168.99.101:9000, using the IP address of the development host we noted earlier.
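Rather than hard-coding the IP address, it can be derived from the DOCKER_HOST variable that docker-machine env sets, which has the form tcp://<ip>:2376. This minimal Java sketch assumes that format; AppUrl is a hypothetical helper name.

```java
import java.net.URI;

// Hypothetical helper: builds the application URL from DOCKER_HOST,
// assuming the tcp://<ip>:<port> form that docker-machine env produces.
final class AppUrl {
    private AppUrl() {}

    static String appUrl(String dockerHost, int appPort) {
        String host = URI.create(dockerHost).getHost();
        if (host == null) {
            // e.g. a unix:///var/run/docker.sock socket has no network host
            throw new IllegalArgumentException("No host in DOCKER_HOST: " + dockerHost);
        }
        return "http://" + host + ":" + appPort;
    }

    public static void main(String[] args) {
        String dockerHost = System.getenv("DOCKER_HOST");
        if (dockerHost == null) {
            System.out.println("DOCKER_HOST is not set; run the docker-machine env step first.");
            return;
        }
        System.out.println(appUrl(dockerHost, 9000)); // published port from -p 9000:9000
    }
}
```

Only the host part of DOCKER_HOST is reused; the Docker daemon port is replaced with the application port published by -p.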

Run in Production Mode

When running in development mode, every time a request is made from the browser, Play checks with SBT for any source changes, compiles them if needed, and then serves the request.

This may have a significant impact on the performance of your application. If you’d like the application to load fast, this subsection is going to help.

Before you run your application in production mode, you need to set an application secret. Play uses this secret to sign session cookies, among other things, so leaving it at a publicly known value would let attackers forge them.

You can set it in the application.conf file located in the conf directory at the root of your application. Look for the following line: play.crypto.secret = "changeme". If Play finds that the secret key has not been changed from the default changeme when running in production mode, it will throw an error.

For simplicity’s sake, we can change it to 0123456789. This is fine for a tutorial, but a real deployment should use a long random value.
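For a random secret instead of a fixed value like 0123456789, a minimal Java sketch using java.security.SecureRandom is shown below. SecretGenerator is a hypothetical helper, and the 32-byte length is an assumption rather than a Play requirement; Play’s console also provides a playGenerateSecret task for the same purpose.

```java
import java.security.SecureRandom;

// Hypothetical helper: generates a random application secret as lowercase hex.
final class SecretGenerator {
    private static final SecureRandom RANDOM = new SecureRandom();
    private static final char[] HEX = "0123456789abcdef".toCharArray();

    private SecretGenerator() {}

    // Returns numBytes random bytes encoded as 2 * numBytes hex characters.
    static String generateSecret(int numBytes) {
        byte[] bytes = new byte[numBytes];
        RANDOM.nextBytes(bytes);
        StringBuilder sb = new StringBuilder(numBytes * 2);
        for (byte b : bytes) {
            sb.append(HEX[(b >> 4) & 0xF]).append(HEX[b & 0xF]);
        }
        return sb.toString();
    }

    // Mirrors the rule described above: the default "changeme" is not acceptable.
    static boolean isDefaultSecret(String secret) {
        return "changeme".equals(secret);
    }

    public static void main(String[] args) {
        System.out.println("play.crypto.secret = \"" + generateSecret(32) + "\"");
    }
}
```

The printed line can be pasted into conf/application.conf in place of the default value.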

Now run the staging command:

docker run -i -v /c/Users/currentuser/:/root/ -v /c/Users/currentuser/docker-play:/usr/src/app egima/docker-play activator clean stage

The command activator clean stage prepares the application to run in production mode in place. We use it when we don’t want to create a full distribution, but want to run our application from the project’s source directory.

This command cleans and compiles your application, retrieves the required dependencies, and copies them to the target/universal/stage directory. It also creates a bin/<start> script, where <start> is the project’s name, in our case docker-play. The script runs the Play server on Unix-style systems, and there is also a corresponding docker-play.bat file for Windows.

The -v flag mounts a host directory onto a path in the container. The first mount maps our user directory to /root/, the home directory of the container’s root user; we will explain why we do this in the next section. The second mount maps the project directory onto $PROJECT_HOME/app (/usr/src/app), so that the staged files are written to the project on the host instead of staying inside that one container.

Since every docker run starts a fresh container from the image, we rebuild the image from the project root so that COPY picks up the staged files:

docker build -t "egima/docker-play" .

We should now have a staged application inside the target/universal/stage directory of the image, with the startup script inside bin, which is already on $PATH.

Note that everything that comes after the image name in a docker run command is taken as the command to execute inside the container. Now, let’s execute the startup script of our application, docker-play:

docker run -i -p 9000:9000 egima/docker-play docker-play -Dplay.crypto.secret=0123456789

Notice how we pass the script name as well as the application secret key, written without spaces around = so that the shell passes it as a single argument. We don’t need to mount the dependency paths any more, since activator clean stage already copied all the dependencies into the stage directory.

When we load http://192.168.99.101:9000, we should now see the application served in production mode, without any unnecessary HTML.

Known Issues During Run

In this subsection, we’ll explore some issues you may run into. The Play framework is built in Scala and therefore uses SBT to build the application.

Unresolved Dependencies

During the run process, SBT may fail to download some dependencies or simply not find them. This is a common issue, which can be solved by tweaking the dependency resolution in one way or another.

SBT looks for dependencies in the same repositories other Java build tools use, such as Maven Central and Sonatype. However, sometimes it fails to fetch artifacts from these repositories.

The solution we will explore here, should you run into this problem, is to manually add some repositories to the SBT build files.

Go to the project root and open build.sbt. Append the following resolvers to that file, and add the same lines to project/plugins.sbt:

resolvers ++= Seq(
  Resolver.sonatypeRepo("public"),
  Resolver.mavenLocal,
  "Apache Repository" at "https://repository.apache.org/content/repositories/releases/"
)

We have added the Sonatype, Apache, and local Maven repositories to SBT’s list of resolvers. SBT resolves Resolver.mavenLocal to the repository directory inside the local Maven folder, which on Windows is C:/Users/currentuser/.m2/repository. At this point, you can run the container again and check whether the process succeeds before proceeding.
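The default location behind Resolver.mavenLocal follows Maven’s convention of .m2/repository under the user’s home directory, which is why, inside a container where the build runs as root, SBT looks under /root/.m2/repository. This is a minimal Java sketch of that convention; MavenLocal is a hypothetical helper, and it ignores overrides such as a custom localRepository setting in Maven’s settings.xml.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: Maven's default local repository location, ignoring
// overrides such as a custom <localRepository> in settings.xml.
final class MavenLocal {
    private MavenLocal() {}

    static Path localRepository(String userHome) {
        return Paths.get(userHome, ".m2", "repository");
    }

    public static void main(String[] args) {
        // On Windows this is typically C:\Users\<name>\.m2\repository; inside a
        // container running as root, user.home is /root.
        System.out.println(localRepository(System.getProperty("user.home")));
    }
}
```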

Unresolved Maven2 Path

Sometimes, the path resolved for the local Maven repository does not map to the location of your local repo. Since the container runs as root, SBT usually resolves this path to /root/.m2, which does not exist inside the container and does not correspond to any of the shared Windows paths. This results in a directory-not-found failure.

We can fix this by mounting the shared folder C:/Users/currentuser/.m2 onto /root/.m2, the directory where the container already looks for the Maven repository. We can achieve this by modifying the run command as follows:

docker run -v /c/Users/currentuser/.m2:/root/.m2 -i -p 9000:9000 egima/docker-play activator run

You can try the above command before proceeding to the last issue.

Missing Dependencies In M2

Even when SBT can see the local Maven repository, a dependency may still be missing from it. This will be evident in the failure logs on the console when the run is unsuccessful, which will contain a portion similar to the one below:

==== Maven2 Local: tried
file:/root/.m2/repository/org/sonatype/sisu/sisu-guice/3.1.0/sisu-guice-3.1.0.jar
==== Apache Repository: tried
https://repository.apache.org/content/repositories/releases/org/sonatype/sisu/sisu-guice/3.1.0/sisu-guice-3.1.0.jar
::::::::::::::::::::::::::::::::::::::::::::::
:: FAILED DOWNLOADS ::
:: ^ see resolution messages for details ^ ::
::::::::::::::::::::::::::::::::::::::::::::::
:: org.sonatype.sisu#sisu-guice;3.1.0!sisu-guice.jar
::::::::::::::::::::::::::::::::::::::::::::::

If this happens, we’ll need to identify the missing dependencies, and add them manually to the local Maven repository by following the official Apache Maven tutorial.
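Once the missing artifact is identified, its ID from the log (group#artifact;version!file) contains everything needed to place it in the local Maven repository. This sketch parses that ID and prints both the expected path inside .m2/repository and an mvn install:install-file command for the maven-install-plugin. MissingArtifact is a hypothetical helper, and it assumes a plain jar without a classifier.

```java
// Hypothetical helper: turns an SBT/Ivy dependency ID such as
// org.sonatype.sisu#sisu-guice;3.1.0!sisu-guice.jar into Maven coordinates.
final class MissingArtifact {
    final String groupId;
    final String artifactId;
    final String version;

    private MissingArtifact(String groupId, String artifactId, String version) {
        this.groupId = groupId;
        this.artifactId = artifactId;
        this.version = version;
    }

    // Expected format: group#artifact;version, optionally followed by !file.
    static MissingArtifact parse(String ivyId) {
        String id = ivyId.trim();
        int bang = id.indexOf('!');
        if (bang >= 0) {
            id = id.substring(0, bang); // drop the "!sisu-guice.jar" part
        }
        int hash = id.indexOf('#');
        int semi = id.indexOf(';', hash + 1);
        if (hash <= 0 || semi <= hash + 1 || semi == id.length() - 1) {
            throw new IllegalArgumentException("Unexpected dependency ID: " + ivyId);
        }
        return new MissingArtifact(
                id.substring(0, hash), id.substring(hash + 1, semi), id.substring(semi + 1));
    }

    // Jar location relative to the local repository root, using the standard
    // Maven layout: group directories, artifact, version, artifact-version.jar.
    String repositoryPath() {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version
                + "/" + artifactId + "-" + version + ".jar";
    }

    // Command for the maven-install-plugin, given a jar downloaded by hand.
    String installCommand(String jarFile) {
        return "mvn install:install-file -Dfile=" + jarFile
                + " -DgroupId=" + groupId
                + " -DartifactId=" + artifactId
                + " -Dversion=" + version
                + " -Dpackaging=jar";
    }

    public static void main(String[] args) {
        MissingArtifact a = parse("org.sonatype.sisu#sisu-guice;3.1.0!sisu-guice.jar");
        System.out.println(a.repositoryPath());
        System.out.println(a.installCommand("sisu-guice-3.1.0.jar"));
    }
}
```

The first printed line, org/sonatype/sisu/sisu-guice/3.1.0/sisu-guice-3.1.0.jar, matches the tail of the URLs that SBT reports as tried in the log above.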

Conclusion

In this tutorial, we learned what Docker is, how it works, and why we as software developers should consider using it. We created a Java Play application, demonstrated how to dockerize it, and resolved some common build issues.

If you have any questions and comments, feel free to leave them in the section below.

P.S. Want to continuously deliver your applications made with Docker? Check out Semaphore’s Docker support.
