Continuous Integration (CI) is a software engineering practice in which we test our code on every update. The practice creates a strong feedback loop that reveals errors as soon as they are introduced. Consequently, we can spend more of our time coding features rather than hunting bugs. Running Microservice architecture is no different, in this article we’ll focus on building and deploying Microservice Spring Boot application.

Once we’re confident about the code, we can move on to Continuous Delivery (CD). Continuous Delivery goes through all the steps that make the release so each successful update will have a corresponding package—ready for deployment.

I believe that practice is the best school. That is why I’ve prepared a demo project that you can practice on. As you follow the steps, you’ll learn how CI/CD can help you code faster and better.

Preparing a Microservice Java for Docker

Getting ready

Here’s a list of the things you’ll need to get started.

- Your favorite Java development editor and SDK.

- A Docker Hub account and Docker.

- A GitHub account and Git.

- Curl to test the application.

Let’s get everything ready. First, fork the repository with the demo and clone it to your machine.

This is the project’s structure:

The application is built in Java Spring Boot and it exposes some API endpoints. It uses an in-memory H2 database; that will do for testing and development, but once it goes to production we’ll need to switch to a different storage method. The project includes tests, benchmarks and everything needed to create the Docker image.

These are the commands that we can use to test and run the application:

$ mvn clean test

$ mvn clean test -Pintegration-testing

$ mvn spring-boot:runOnce the app is started, you can create a user with a POST request:

$ curl -w "\\n" -X POST \

-d '{ "email": "wally@example.com", "password": "sekret" }' \

-H "Content-type: application/json" localhost:8080/users

{"username":"wally@example.com"}With the user created, you can authenticate and see the secured webpage:

$ curl -w "\n" --user wally@example.com:sekret localhost:8080/admin/home

<!DOCTYPE HTML>

<html>

<div class="container">

<header>

<h1>

Welcome <span>tom@example.com</span>!

</h1>

</header>

</div>You can also try it with login page at:

http://localhost:8080/admin/home

The project ships with Apache Jmeter to generate benchmarks:

$ mvn clean jmeter:jmeter

$ mvn jmeter:guiA Dockerfile to create a Docker image is also included:

$ docker build -t semaphore-demo-java-spring .CI/CD Workflow

In this section, we’ll examine what’s inside the .semaphore directory. Here we’ll find the entire configuration for the CI/CD workflow.

We’ll use Semaphore as our Continuous Integration solution. Our CI/CD workflow will:

- Download Java dependencies.

- Build the application JAR.

- Run the tests and benchmark. And, if all goes well…

- Create a Docker image and push it to Docker Hub.

But first, open your browser at Semaphore and sign up with your GitHub account; that will link up both accounts.

Click on the + Create new link to add your repository to Semaphore:

Choose your repository:

The repository has a sample CI/CD workflow, so when prompted, select I will use the existing configuration.

Continuous Integration for Microservice Spring Boot

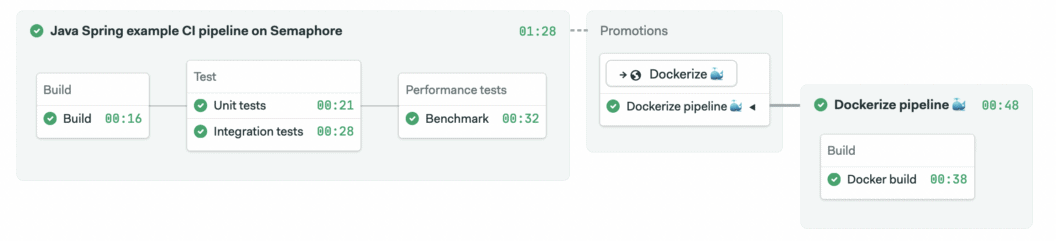

Semaphore will show the CI/CD pipelines as soon as you make a push to GitHub:

$ touch some_file

$ git add some_file

$ git commit -m "add Semaphore"

$ git push origin masterClick on the Edit Workflow button on the top right corner to view the workflow builder.

Let us examine the CI pipeline.

Name and agent: Each pipeline has a name and an agent. The agent is the virtual machine type that powers the jobs. Semaphore offers several machine types, we’ll use the free-tier plan e1-standard-2 type with an Ubuntu 20.04.

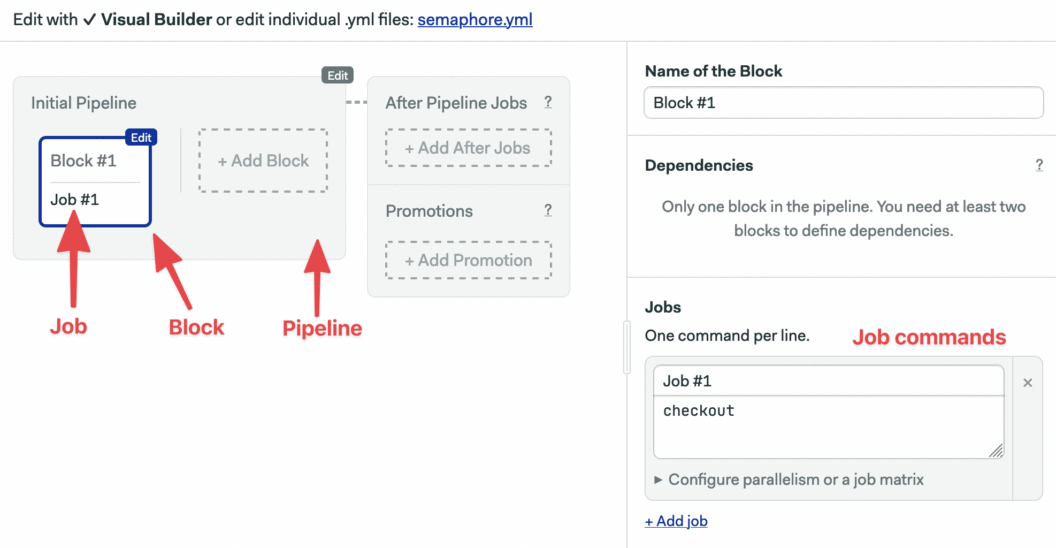

Blocks and Jobs: Jobs define the commands that give life to the CI/CD process. Jobs are grouped in blocks.

Jobs in a block run concurrently. Once all jobs in a block are complete, the next block begins.

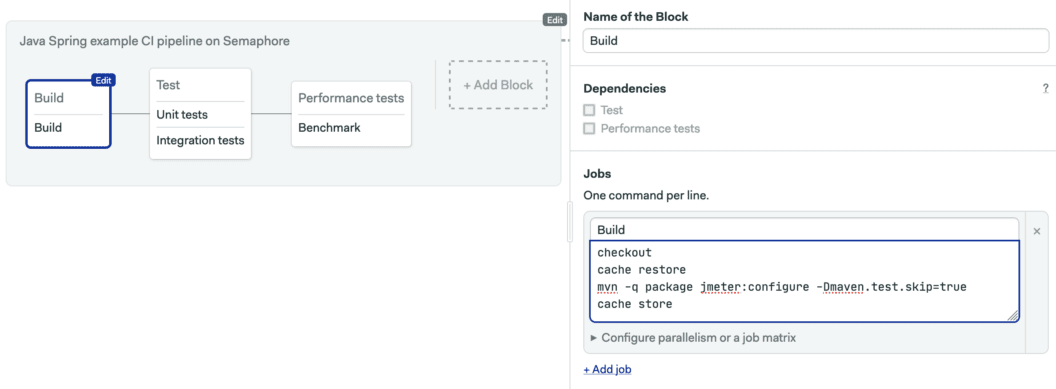

Click on the “Build” block to view its job:

The “Build” job downloads the dependencies and builds the application JAR without running any tests:

sem-version java 17

checkout

cache restore

mvn -q package jmeter:configure -Dmaven.test.skip=true

cache storeThe block uses checkout to clone the repository and cache to store and retrieve the Java dependencies.

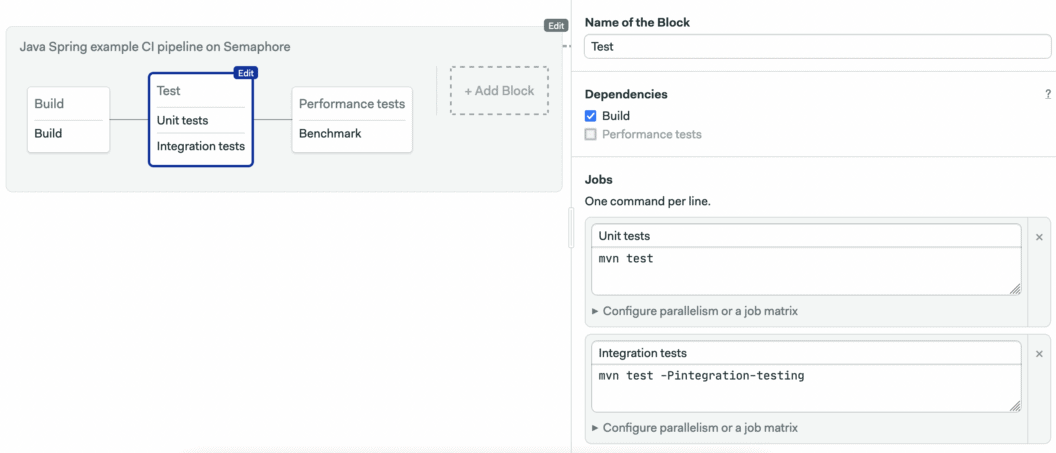

The “Tests” Block has two jobs:

Commands that we put in the prologue run before each job in the block. This is where we conventionally put common set up commands:

sem-version java 17

checkout

cache restore

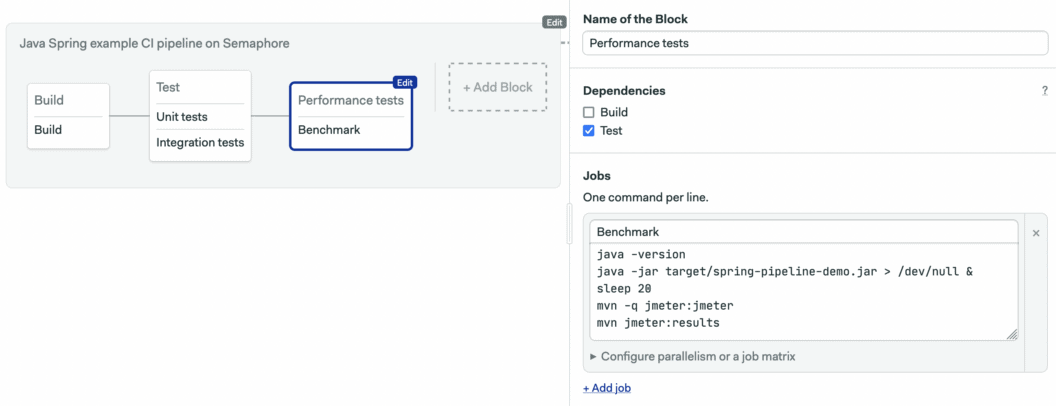

mvn -q test-compile -Dmaven.test.skip=trueThe third block runs the benchmarks:

The Benchmark job starts the application in the background and measures its performance with Apache JMeter:

sem-version java 17

java -jar target/spring-pipeline-demo.jar > /dev/null &

sleep 20

mvn -q jmeter:jmeter

mvn jmeter:resultsStore Your Docker Hub Credentials on Semaphore

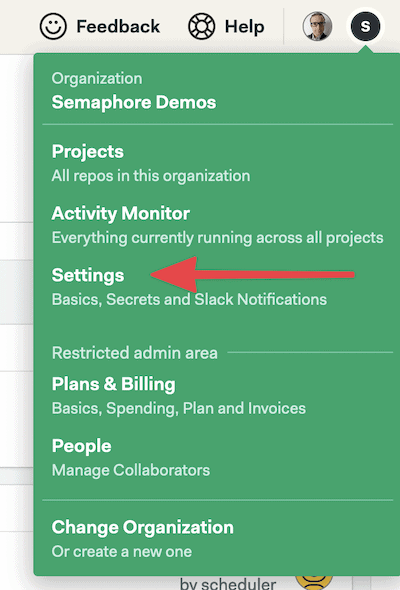

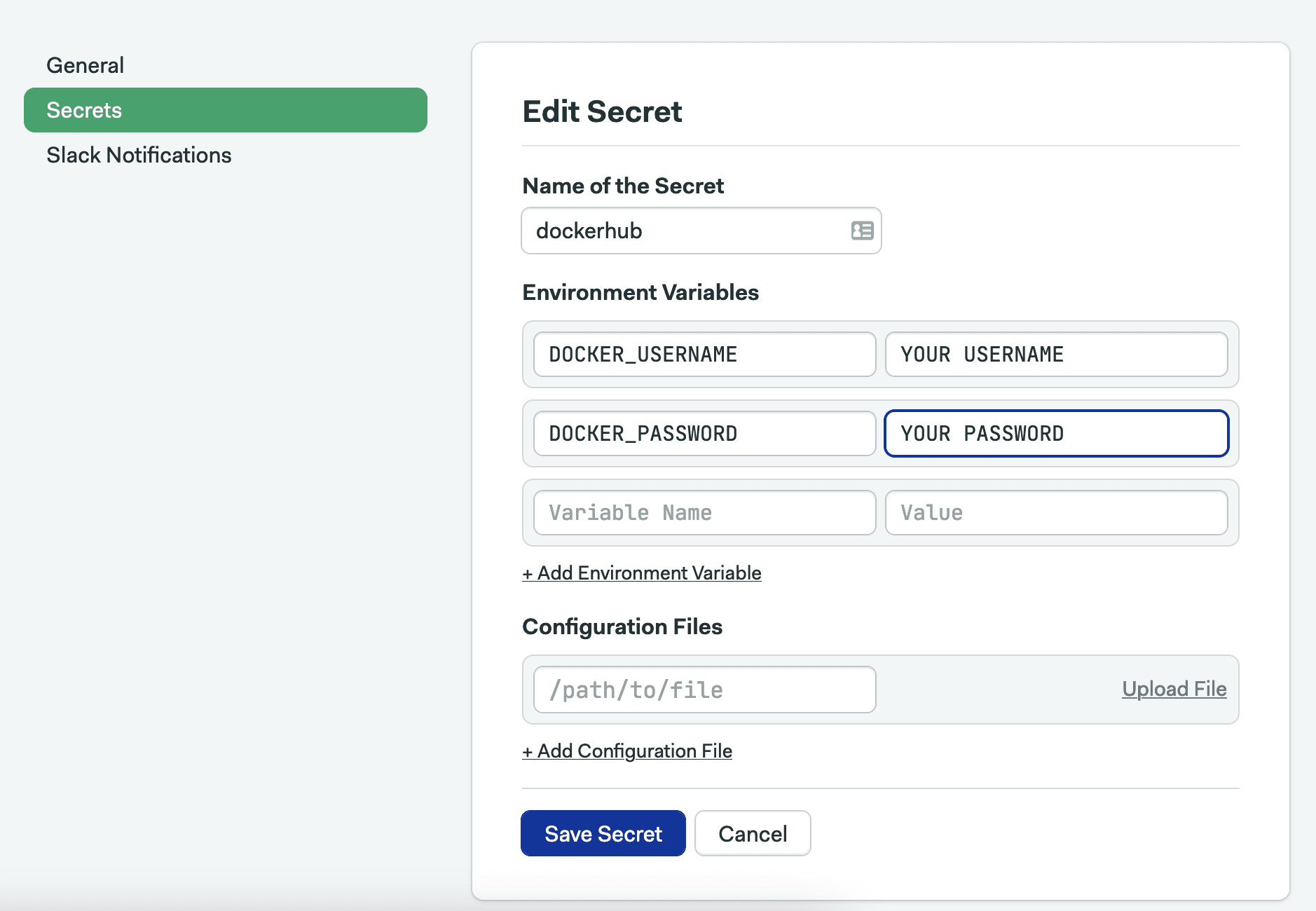

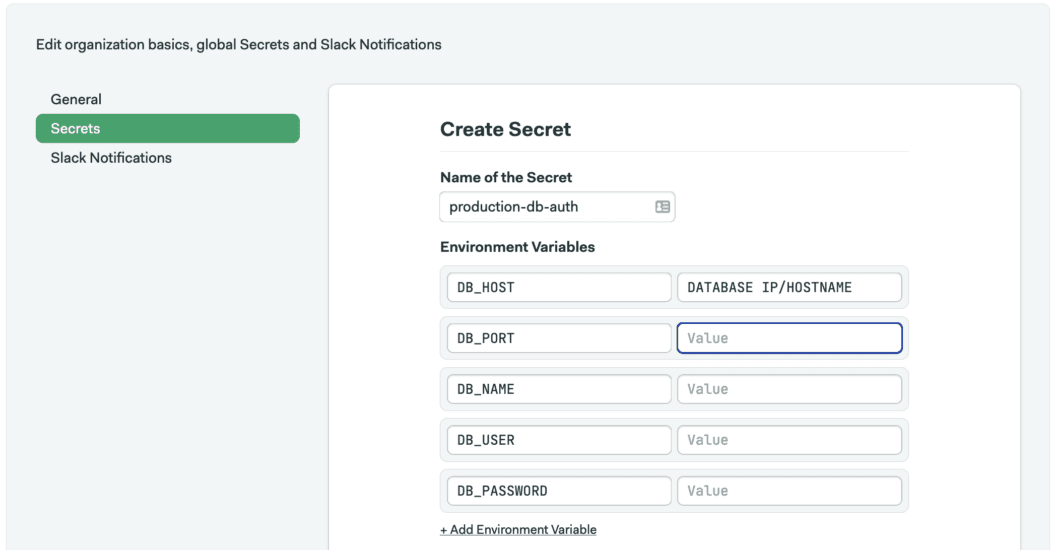

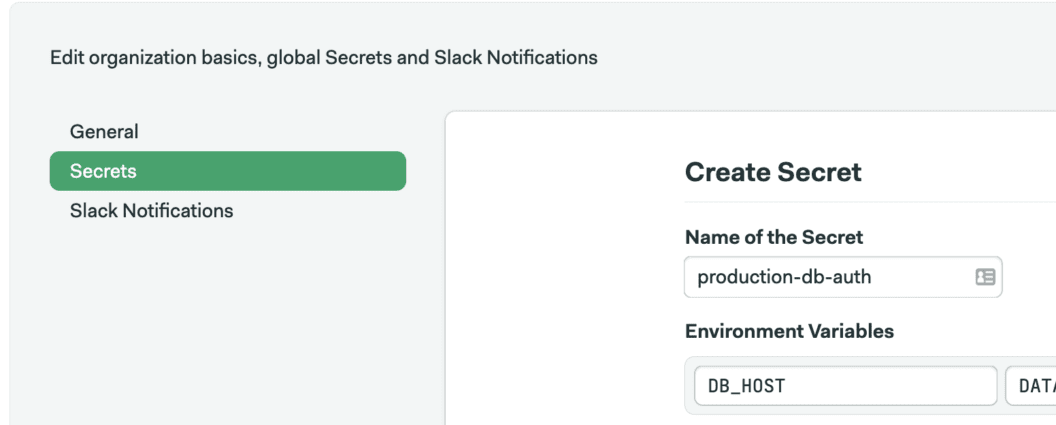

To securely store passwords, Semaphore provides the secrets feature. When we reference a secret in a job, it’s automatically decrypted and made available.

Create a secret with your Docker Hub username and password. Semaphore will need that information to push images into your repository:

- Under Account menu click on Settings.

- Click on Secrets.

- Press the Create New Secret button.

- Create a secret called “dockerhub” with your username and password:

Continuous Delivery

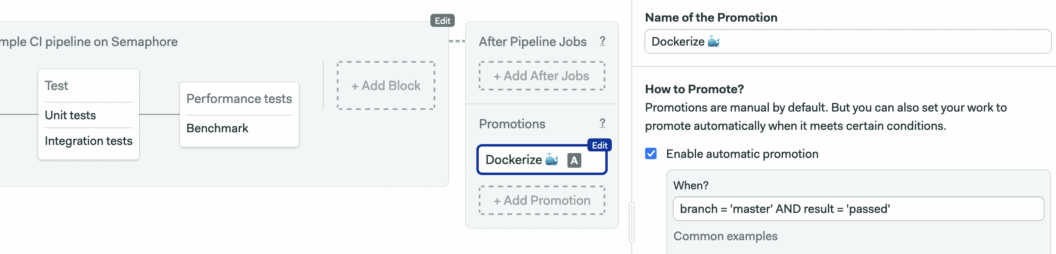

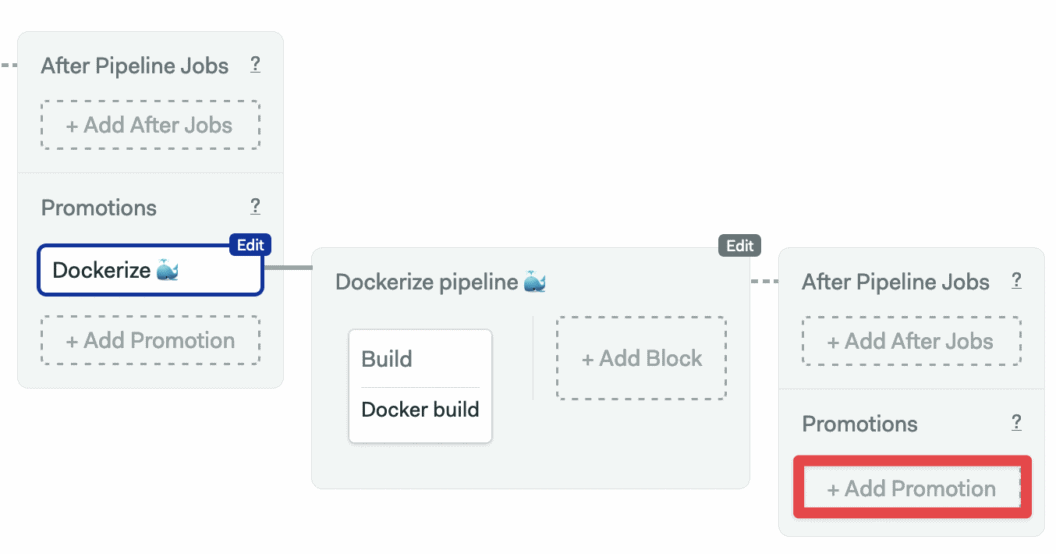

Next to the benchmark block, we find a promotion that connects the CI and the “Dockerize” pipelines together. Promotions connect pipelines to create branching workflows. Check the Enable automatic promotion option to start the build automatically.

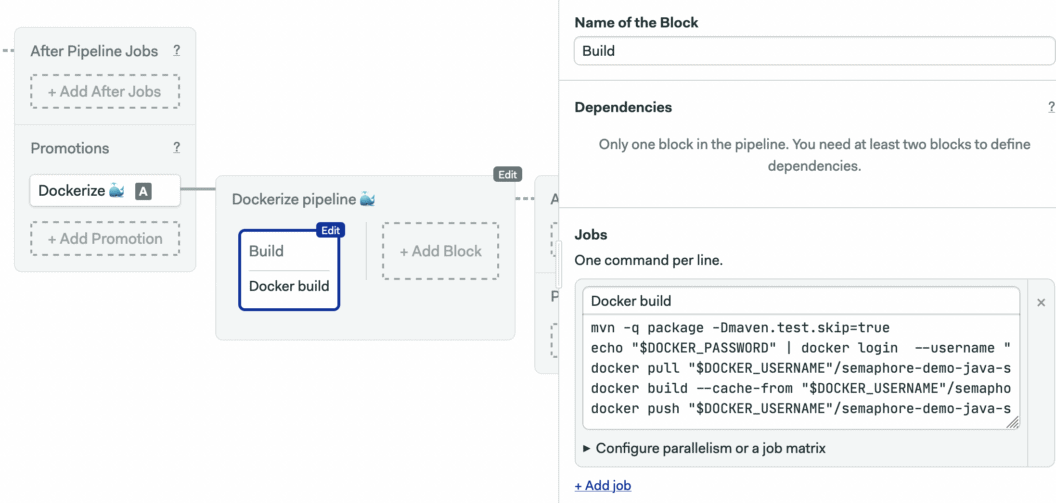

The demo includes a Dockerfile to package the application into a Docker image:

FROM openjdk:17-jdk-alpine

ARG ENVIRONMENT

ENV ENVIRONMENT ${ENVIRONMENT}

COPY target/*.jar app.jar

ENTRYPOINT ["java","-Dspring.profiles.active=${ENVIRONMENT}", "-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]The “Dockerize” pipeline has a single job to build and push the image to Docker Hub:

The job builds the image by:

- Login in to Docker Hub.

- Pulling the “latest” image

- Building the new image.

- Pushing the image

mvn -q package -Dmaven.test.skip=true

echo "$DOCKER_PASSWORD" | docker login --username "$DOCKER_USERNAME" --password-stdin

docker pull "$DOCKER_USERNAME"/semaphore-demo-java-spring:latest || true

docker build --cache-from "$DOCKER_USERNAME"/semaphore-demo-java-spring:latest \

--build-arg ENVIRONMENT="${ENVIRONMENT}" \

-t "$DOCKER_USERNAME"/semaphore-demo-java-spring:latest .

docker push "$DOCKER_USERNAME"/semaphore-demo-java-spring:latestTesting the Docker image

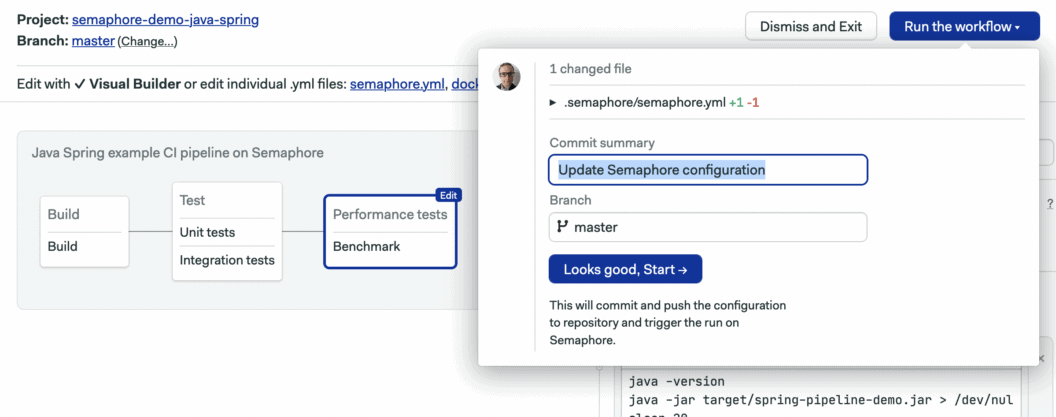

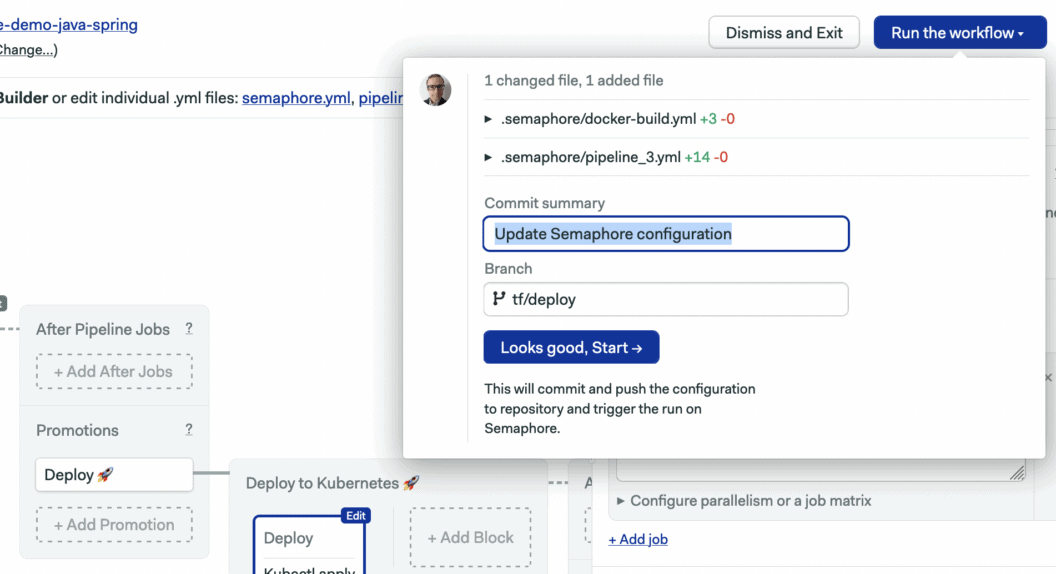

Click on the Run the workflow button and then choose Start.

After a couple of minutes, you should have a new image ready to use in Docker Hub:

Let’s try the new image. Pull it from your machine:

$ docker pull YOUR_DOCKER_USER/semaphore-demo-java-spring:latestAnd start it with:

$ docker run -it -p 8080:8080 YOUR_DOCKER_USER/semaphore-demo-java-springContinuous Deployment to Kubernetes

You may recall that our application has a glaring flaw: the lack of data persistence — our precious data is lost across reboots. Fortunately, this is easily fixed by adding a new profile with a real database. For the purposes of this tutorial, I’ll choose MySQL. You can follow me with MySQL on this section or choose any other backend from the Hibernate providers page.

First, edit the Maven manifest file (pom.xml) to add a production profile inside the <profiles> … </profiles> tags:

<profile>

<id>production</id>

<properties>

<maven.test.skip>true</maven.test.skip>

</properties>

</profile>Then, between the <dependencies> … </dependencies> tags, add the MySQL driver as a dependency:

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

</dependency>Finally, create a production-only properties file at src/main/resources/application-production.properties:

spring.jpa.properties.hibernate.dialect=org.hibernate.dialect.MySQL55Dialect

spring.datasource.url=jdbc:mysql://${DB_HOST:localhost}:${DB_PORT:3306}/${DB_NAME}

spring.datasource.username=${DB_USER}

spring.datasource.password=${DB_PASSWORD}We must avoid placing secret information such as passwords in GitHub. We’ll use environment variables and decide later how we’ll pass them along.

Push the changes to GitHub:

$ git add src/main/resources/application-production.properties

$ git add pom.xml

$ git commit -m "add production profile"

$ git push origin masterNow our application is ready for prime time.

Preparing your Cloud to run Microservices

In this section, we’ll create the database and Kubernetes clusters. Log in to your favorite cloud provider and provision a MySQL database and a Kubernetes cluster.

Creating the database

Create a MySQL database with a relatively new version (i.e., 5.7 or 8.0+). You can install your own server or use a managed cloud database. For example, AWS has RDS and Aurora, and Google Cloud has Google SQL.

Once you have created the database service:

- Create a database called “demodb”.

- Create a user called “demouser” with, at least, SELECT, INSERT, UPDATE permissions.

- Take note of the database IP address and port.

Once that is set up, create the user tables:

CREATE TABLE `hibernate_sequence` (

`next_val` bigint(20) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `users` (

`id` bigint(20) NOT NULL,

`created_date` datetime DEFAULT NULL,

`email` varchar(255) DEFAULT NULL,

`modified_date` datetime DEFAULT NULL,

`password` varchar(255) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;Creating the Kubernetes cluster

Most cloud providers offer Kubernetes clusters, for instance, AWS has Elastic Kubernetes Service (EKS) and Google Cloud has its Kubernetes Engine. I’ll try to keep this tutorial vendor-agnostic so you, dear reader, have the freedom to choose whichever alternative best suits your needs.

In regards to the cluster node sizes, this is a microservice, so requirements are minimal. The most modest machine will suffice, and you can adjust the number of nodes to your budget. If you want to have rolling updates—that is, upgrades without downtime—you’ll need at least two nodes, and three is best.

Working with Kubernetes

On paper, Kubernetes deployments are simple and tidy: you specify the desired final state and let the cluster manage itself. And it can be, once we can understand how Kubernetes thinks about:

- Pods: a pod is a team of containers. Containers in a pod are guaranteed to run on the same machine.

- Deployments: a deployment monitors pods and manages their allocation. We can use deployments to scale up or down the number of pods and perform rolling updates.

- Services: services are entry points to our application. Service exposes a fixed public IP for our end users, they can do port mapping and load balancing.

- Labels: labels are short key-value pairs we can add to any resource in the cluster. They are useful to organize and cross-reference objects in a deployment. We’ll use labels to connect the service with the pods.

Did you notice that I didn’t list containers as an item? While it is possible to start a single container in Kubernetes, it’s best if we think of them as tires on a car, they’re only useful as parts of the whole.

Let’s start by defining the service. Create a manifest file called deployment.yml with the following contents:

apiVersion: v1

kind: Service

metadata:

name: semaphore-demo-java-spring-lb

spec:

selector:

app: semaphore-demo-java-spring-service

type: LoadBalancer

ports:

- port: 80

targetPort: 8080

We find the service definition under the spec tree: a network load balancer that forwards HTTP traffic to port 8080. Add the following contents to the same file, separated by three hyphens:

apiVersion: apps/v1

kind: Deployment

metadata:

name: semaphore-demo-java-spring-service

spec:

replicas: 3

selector:

matchLabels:

app: semaphore-demo-java-spring-service

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

template:

metadata:

labels:

app: semaphore-demo-java-spring-service

spec:

imagePullSecrets:

- name: dockerhub

containers:

- name: semaphore-demo-java-spring-service

image: ${DOCKER_USERNAME}/semaphore-demo-java-spring:$SEMAPHORE_WORKFLOW_ID

imagePullPolicy: Always

env:

- name: ENVIRONMENT

value: "production"

- name: DB_HOST

value: "${DB_HOST}"

- name: DB_PORT

value: "${DB_PORT}"

- name: DB_NAME

value: "${DB_NAME}"

- name: DB_USER

value: "${DB_USER}"

- name: DB_PASSWORD

value: "${DB_PASSWORD}"

readinessProbe:

initialDelaySeconds: 60

httpGet:

path: /login

port: 8080The template.spec branch defines the containers that make up a pod. There’s only one container in our application, referenced by its image. Here we also pass along the environment variables.

Spec also defines how the cluster authenticates with the docker registry. In the manifest we reference a secret called dockerhub. For this to work, you’ll have to store your Docker Hub username and password in the cluster with the following command:

$ kubectl create secret docker-registry dockerhub \

--docker-server=docker.io \

--docker-username=YOUR_DOCKER_HUB_USERNAME \

--docker-password=YOUR_DOCKER_HUB_PASSWORD

secret/dockerhub createdThe total number of pods is controlled with replicas. You should set it to the number of nodes in your cluster.

The update policy is defined in strategy. A rolling update refreshes the pods in turns, so there is always at least one pod working. The test used to check if the pod is ready is defined with readinessProbe.

Selector, labels and matchLabels work together to connect the service and deployment. Kubernetes looks for matching labels to combine resources.

You may have noticed that were are using special tags in the Docker image. In the Docker Build pipeline, we tagged all our Docker images as “latest”. The problem with the latest is that we lose the capacity to version images; older images get overwritten on each build. If we have difficulties with a release, there is no previous version to roll back to. So, instead of the latest, it’s best to use some unique value. We’ll use the special environment variable $SEMAPHORE_WORKFLOW_ID which can be shared among all pipelines and is unique for every run.

Preparing for Continuous Deployment for the Microservice

In this section, we’ll configure Semaphore CI/CD for Kubernetes deployments.

Creating more secrets

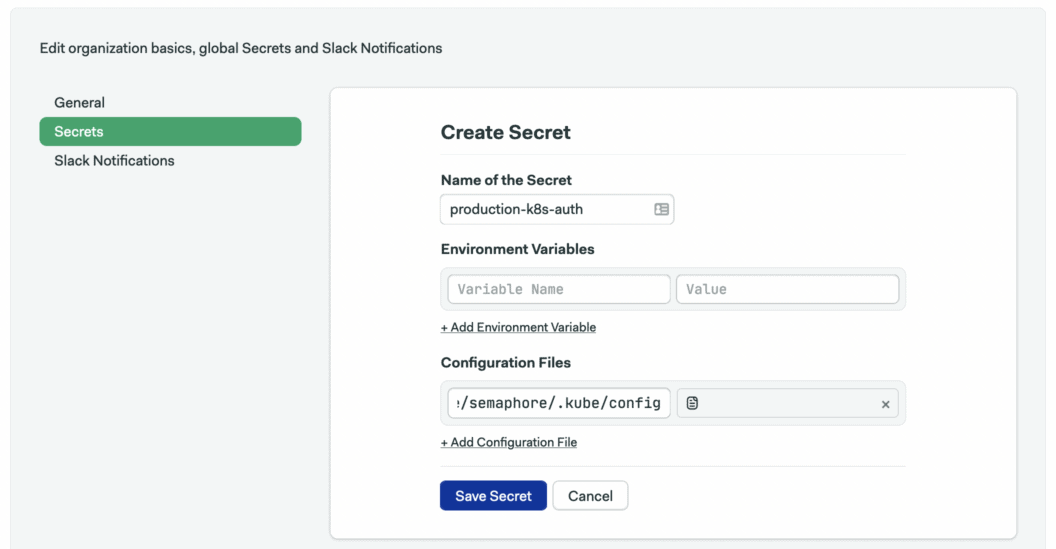

Earlier you created a secret with your Docker Hub credentials. Here, you’ll need to repeat the procedure with two more pieces of information.

Database user: a secret that contains your database username, password, and other connection details.

Kubernetes cluster: a secret with the Kubernetes connection parameters. The specific details will depend on how and where the cluster is running. For example, if a kubeconfig file was provided, you can upload it to Semaphore as follows:

Creating the deployment pipeline

We’re almost done. The only thing left is to create a Deployment Pipeline to:

- Generate manifest: populate the manifest with the real environment variables.

- Make a deployment: send the desired final state to the Kubernetes cluster, making our microservice Spring Boot app available.

Depending on where and how the cluster is running, you may need to adapt the following code. If you only need a kubeconfig file to connect to your cluster, great, this should be enough. Some cloud providers, however, need additional helper programs.

For instance, AWS requires the aws-iam-authenticator when connecting with the cluster. You may need to install programs—you have full sudo privileges in Semaphore—or add more secrets. For more information, consult your cloud provider documentation.

Open the Workflow Builder again by clicking on the Edit the workflow button.

Scroll right to the end of the last pipeline and click on the Add First Promotion button.

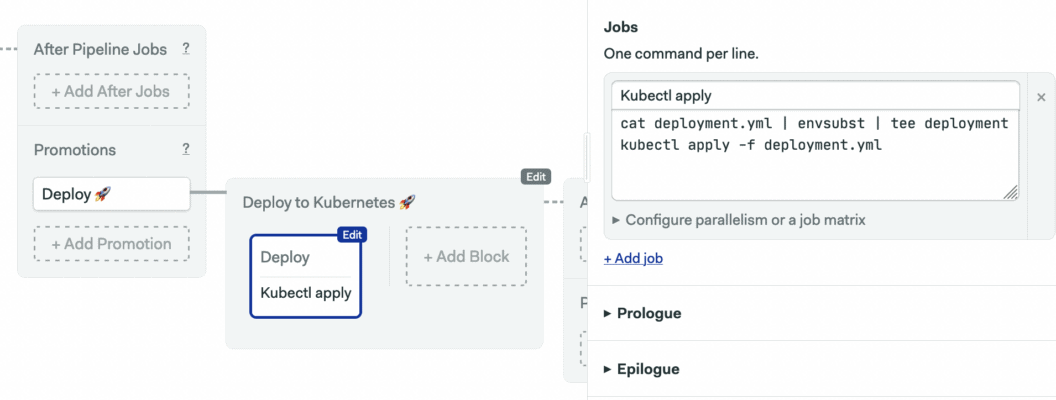

Let’s call the new pipeline “Deploy to Kubernetes”. Click on the first block on the new pipeline and fill in the job details as follows:

- Secrets: select all the secrets you created.

- dockerhub

- production-db-auth

- production-k8s-auth

- Environment variables: add any special variables required by your cloud provider such as

DEFAULT_REGIONorPROJECT_ID. - Prologue: add a

checkoutcommand and fill in any cloud-specific login, install or activation commands (gcloud, aws, etc.) - Job: generate the manifest and make the deployment with the following commands:

cat deployment.yml | envsubst | tee deployment.yml

kubectl apply -f deployment.yml

Since we are abandoning the latest tag, we need to change to the “Docker Build” pipeline. The Docker images must be tagged with the same workflow id across all pipelines.

Scroll left to the “Build and deploy Docker container” block and replace the last two commands in the job:

docker build \

--cache-from "$DOCKER_USERNAME"/semaphore-demo-java-spring:latest \

--build-arg ENVIRONMENT="${ENVIRONMENT}" \

-t "$DOCKER_USERNAME"/semaphore-demo-java-spring:latest .

docker push "$DOCKER_USERNAME"/semaphore-demo-java-spring:latestWith these two commands:

docker build \

--cache-from "$DOCKER_USERNAME"/semaphore-demo-java-spring:lastest \

--build-arg ENVIRONMENT="${ENVIRONMENT}" \

-t "$DOCKER_USERNAME"/semaphore-demo-java-spring:$SEMAPHORE_WORKFLOW_ID .

docker push "$DOCKER_USERNAME"/semaphore-demo-java-spring:$SEMAPHORE_WORKFLOW_IDYour first deployment

At this point, you’re ready to do your first deployment. Press Run the workflow and click on Start. This should start the microservice Spring Boot app:

Allow a few minutes for the pipelines to do their work. The workflow will stop at the “Docker build” block. Click on Promote to deploy to Kubernetes:

You can monitor the process from your cloud console or using kubectl:

$ kubectl get all -o wideTo retrieve the external service IP address, check your cloud dashboard page or use kubectl:

$ kubectl get servicesThat’s it. The service is running, and you now have a complete CI/CD process to deploy your application.

Wrapping up

Now You’ve learned how Semaphore and Docker can work together to automate Kubernetes deployments. Feel free to fork the demo project and adapt it to your needs. Kubernetes developers are in high demand and you just did your first Kubernetes deployment, way to go!

Some ideas to have fun with:

- Make a change and push it to GitHub. You’ll see that Kubernetes does a rolling update, restarting one pod at a time until all of them are running the newer version.

- Add more types of tests to your CI.

- Try implementing a more complicated setup. Create additional services and run them as separate containers in the same pod.

- Run the tests inside the Docker image.

Then, learn more about CI/CD for Docker and Kubernetes with our other step-by-step tutorials:

- Download our free ebook: CI/CD with Docker and Kubernetes

- A Step-by-Step Guide to Continuous Deployment on Kubernetes

- Learn all about CI/CD on Docker and Kubernetes

Part 1 and Part 2 of this tutorial were originally published on JAXenter.