Docker Swarm and Kubernetes are both container orchestration technologies. Container orchestration automates the provisioning, deployment, networking, scaling, availability, and lifecycle management of containers.

Container orchestration technologies make it simpler to deploy and manage containerized applications, particularly complex applications made up of large numbers of containers. Orchestrators provide features like load balancing, service discovery, scaling, self-healing, and rolling updates, which simplify managing containerized applications across many hosts or even multiple cloud providers.

Some examples of container orchestration technologies include:

- Docker Swarm: a native utility for scheduling and clustering Docker containers.

- Kubernetes: an open-source container orchestration platform, originally created by Google, that schedules and manages containers across a cluster of hosts.

- Apache Mesos: an open-source cluster manager that runs a range of distributed applications, including containerized apps, on a cluster of computers. Mesos provides fault tolerance, isolation, and dynamic resource allocation.

- Amazon ECS: a container orchestration platform from Amazon Web Services that is fully managed.

- Google Kubernetes Engine (GKE): a managed Kubernetes service on Google Cloud Platform (GCP).

- Amazon Elastic Kubernetes Service (EKS): a fully managed, Kubernetes-based container orchestration platform from Amazon Web Services. It lets you run, manage, and scale containerized applications using Kubernetes on the AWS cloud.

For the purposes of this article, we will focus on Docker Swarm and Kubernetes, two of the most widely used container orchestration technologies.

What is Docker Swarm?

Docker Swarm is a container orchestration tool that allows you to manage a cluster of Docker hosts and deploy containerized applications across them.

Docker Swarm offers a comprehensive and flexible system for managing and deploying containerized applications at scale. You get the benefits of containerization while keeping your applications highly available, scalable, and easy to maintain.

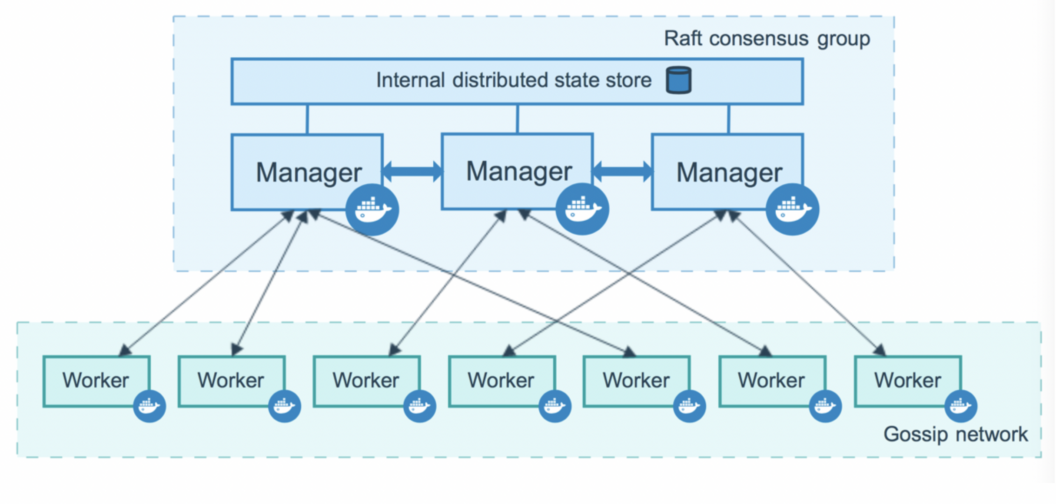

With Docker Swarm, you can deploy and manage services, scale them up or down as necessary, and track their performance and health. A Docker Swarm cluster is made up of one or more Docker hosts joined into a swarm. One or more of these hosts are designated as manager nodes. Manager nodes are in charge of maintaining the swarm’s state and distributing tasks and services among the worker nodes.

- Manager nodes: Manager nodes are responsible for managing the Swarm cluster and orchestrating the deployment of services. They maintain the desired state of the services, handle service scaling and rolling updates, and communicate with other nodes in the cluster to coordinate tasks. Docker recommends an odd number of manager nodes and no more than seven per swarm. Three or five is typical, since three managers tolerate the loss of one and five tolerate the loss of two.

- Worker nodes: Worker nodes run the containers that make up the cluster’s deployed services. They receive tasks from the manager nodes and carry them out as Docker containers. The number of worker nodes in a Docker Swarm cluster can be scaled up or down depending on the workload and resource needs of the deployed services.

The diagram above shows how nodes work in Docker Swarm. Refer to the official Docker documentation for a more comprehensive explanation.
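The guidance on manager counts comes down to majority arithmetic: Swarm managers agree on cluster state through Raft consensus, which needs more than half of the managers to be reachable. Below is a minimal sketch of that arithmetic. The function names are illustrative and are not part of any Docker API.

```python
def quorum(managers: int) -> int:
    """Smallest majority of a manager group of the given size."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can be lost while a majority still remains."""
    return managers - quorum(managers)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5, 7):
        print(f"{n} managers: quorum {quorum(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

An even count adds no tolerance: four managers survive one failure, the same as three, which is why odd counts are preferred.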

Advantages of Docker Swarm

Docker Swarm offers several advantages for managing and deploying containerized applications across a cluster of Docker hosts. Here are some of its advantages:

- Your applications and services can be scaled up or down based on the workload and resource demands. You can add or remove nodes from the swarm as necessary, and the workload is automatically distributed across the available nodes.

- Docker Swarm provides a highly available and fault-tolerant infrastructure for running containerized applications. You can run multiple instances of your services across the swarm, and if one node fails, Docker Swarm automatically reschedules its workload onto the remaining nodes.

- A built-in load balancer in Docker Swarm can automatically route incoming requests to the correct service instances that are deployed on the swarm.

- Rolling updates in Docker Swarm let you upgrade your services and apps with little or no downtime. By default, the service is updated one instance at a time, so the remaining instances keep it accessible during the update.

- Docker Swarm provides built-in service discovery, allowing your applications to discover and communicate with each other without the need for external service discovery mechanisms.

- Docker Swarm is simple to install and use because it is built into the Docker engine. Its API is also more straightforward to learn than those of other container orchestration tools.

- Docker Swarm integrates seamlessly with the Docker ecosystem, making it easy to use with other Docker tools and services.
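The rolling update behavior described in the list above can be illustrated with a small simulation. This is a sketch of the idea only, not Swarm's implementation: `rolling_update` and `batch_size` are hypothetical names, with `batch_size` playing a role similar to the update parallelism setting of a Swarm service.

```python
from typing import Iterator, List


def rolling_update(replicas: List[str], new_version: str,
                   batch_size: int = 1) -> Iterator[List[str]]:
    """Yield the replica versions after each batch has been replaced."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    current = list(replicas)  # copy so the caller's list is left untouched
    for start in range(0, len(current), batch_size):
        end = min(start + batch_size, len(current))
        for i in range(start, end):
            current[i] = new_version  # replace one old instance
        yield list(current)


if __name__ == "__main__":
    for step in rolling_update(["v1", "v1", "v1"], "v2"):
        print(step)
```

With three `v1` replicas and a batch size of one, the steps are `['v2', 'v1', 'v1']`, `['v2', 'v2', 'v1']`, and `['v2', 'v2', 'v2']`. At every step except the last, at least one old instance is still serving alongside the new ones.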

Drawbacks of Docker Swarm

Although Docker Swarm offers a variety of advantages for managing and deploying containerized applications, it has some key drawbacks to consider:

- Compared to other container orchestration tools like Kubernetes, Docker Swarm offers fewer features. Although it offers fundamental tools for scaling as well as managing containers, it might fall short in more complex use cases.

- There are fewer possibilities for modifying the behavior and configuration of the swarm compared to other container orchestration technologies.

- Docker recommends a maximum of seven manager nodes per swarm, which might be a constraint for very large deployments. Docker Swarm also has more limited scaling capabilities than other container orchestration solutions.

- While Docker Swarm excels at managing Docker containers, it is tied to the Docker engine, so its support for other container runtimes is limited.

What is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Its abbreviation, “K8s,” refers to the eight letters between the “K” and the “s.” Kubernetes was originally created by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

With Kubernetes, you can manage and scale containers across a cluster of hosts and define your application as a collection of containers with all of its dependencies and requirements. A robust tool for operating containerized applications in production environments, it also offers features like automatic rollouts and rollbacks, self-healing, and horizontal scaling.

A Kubernetes cluster is made up of a master node (also called a control plane node), which controls the cluster’s general state, and one or more worker nodes, which run the application containers. In a production environment, it is good practice to have at least three master nodes to ensure high availability and fault tolerance. With multiple master nodes, the control plane components are replicated across them, so the cluster can continue to operate even if a master node fails. The master nodes provide a uniform API for controlling the cluster’s state, while the worker nodes run the application containers and connect to the master nodes to receive updates and directives.

Advantages of Kubernetes

Kubernetes offers a robust and adaptable system for handling containerized applications that helps you streamline application deployment, enhance scalability, and boost resilience. Below are several advantages of using Kubernetes:

- Kubernetes offers numerous configuration options, including support for various container runtimes and integration with other tools in the container ecosystem, for deploying and managing applications.

- Kubernetes can automatically recover from node failures or other disruptions thanks to its built-in high availability and self-healing features.

- Kubernetes provides a consistent deployment environment for applications, allowing them to be deployed across different cloud providers or on-premises data centers.

- Kubernetes can scale applications up or down quickly in response to demand, which helps when managing containerized applications at scale.

- Rolling updates, load balancing, and service discovery are among the numerous containerized application deployment and management tasks that Kubernetes automates.

- Kubernetes has a large and active community of developers and users, providing a wealth of resources, support, and tooling.

Drawbacks of Kubernetes

Even though Kubernetes can offer considerable advantages for managing containerized applications, organizations should carefully assess the costs and trade-offs involved in adopting it, particularly its complexity and resource requirements.

- The learning curve for Kubernetes can be challenging, especially for developers or operators who are unfamiliar with distributed systems and container management.

- Kubernetes can be resource-intensive, especially for bigger clusters, and needs significant compute and storage resources to function well.

- Although Kubernetes automates many procedures in the deployment and maintenance of applications, it can also add to operational complexity, especially when troubleshooting and diagnosing problems. For example, suppose you have a Kubernetes cluster running a web application that is experiencing intermittent connectivity issues. To diagnose the problem, you would need to investigate all the different layers of the application stack, including the pod, container, service, and networking layers, to determine where the issue is occurring. This could involve looking at logs from multiple sources, including the Kubernetes API server, the kubelet running on each worker node, the application container, and any supporting services or tools.

- Although Kubernetes works with a wide variety of container runtimes and technologies, some older applications or systems might not run on Kubernetes or might need a lot of reconfiguration. For example, to migrate a monolithic application that was not designed with containerization in mind, you may need to break it down into smaller, more modular components that can be containerized.

- Kubernetes has many security capabilities, but it also necessitates a lot of management and configuration effort to guarantee that the cluster and applications are adequately protected.

Kubernetes vs. Docker Swarm: A comparison

| Comparison | Docker Swarm | Kubernetes |
|---|---|---|
| Installation | Setup is quick and simple (assuming you already use Docker) | More involved: you must set up a cluster and install (and learn to use) kubectl |
| GUI | No built-in dashboard, but third-party tools can be added | Detailed web-based dashboard |
| Scalability | Prioritizes fast scaling (reportedly about 5 times faster than K8s) over automatic scaling | Automatic scaling based on traffic and resource usage |
| Learning curve | Simple to use and lightweight, but with limited capabilities | Steep learning curve, but more features |
| Load balancing | Built-in internal load balancing | Services balance traffic inside the cluster; external traffic usually needs an ingress controller or an external load balancer |
| CLI | Integrated Docker CLI, which may limit functionality in some use cases | Needs a separate CLI (kubectl) |
| Cluster setup | Simple to start a cluster | Clusters are harder to set up, but very powerful once running |
| Use case | Simple applications that are straightforward to install and manage | Apps with high demand and a complicated configuration |
| Community | Smaller community, with usage declining | Large, active community |

Installation and setup

Both Kubernetes and Docker Swarm can be run on Linux, macOS, and Windows. Kubernetes offers several installation options, including a managed service from a cloud provider, a Kubernetes distribution, or a manually built cluster using kubeadm. Once the software is installed, users create a cluster of nodes that run the Kubernetes components. For managing and deploying containerized applications, Kubernetes provides tools such as kubectl.

In contrast, Docker Swarm needs no separate installation, because swarm mode is built into the Docker engine. Users initialize a swarm on one host with docker swarm init and add further nodes with docker swarm join. For deploying and administering containerized applications, Docker provides commands such as docker service and docker stack, and a stack can be described in a Docker Compose file.

Overall, the installation and setup procedures for Kubernetes and Docker Swarm differ considerably. Kubernetes provides more installation options and more advanced tooling for deploying and managing containerized applications, while Docker Swarm provides simpler tooling and an easier installation.

Popularity

Due to its open-source status, community support, industry adoption, extensibility, and feature-rich offerings, Kubernetes has grown in popularity since it was first released by Google in 2014. In many industries, Kubernetes has replaced other container orchestration systems as the standard.

On the other hand, Docker Swarm has experienced a fall in popularity in recent years as a result of its limited feature set, weaker community support, and lower level of industry acceptance. While some businesses continue to use Docker Swarm, Kubernetes has become the most popular and widely used container orchestration software.

In summary, both Kubernetes and Docker Swarm have their strengths and weaknesses, but Kubernetes has gained more popularity and industry adoption, while Docker Swarm has become less popular in recent years.

Load balancing

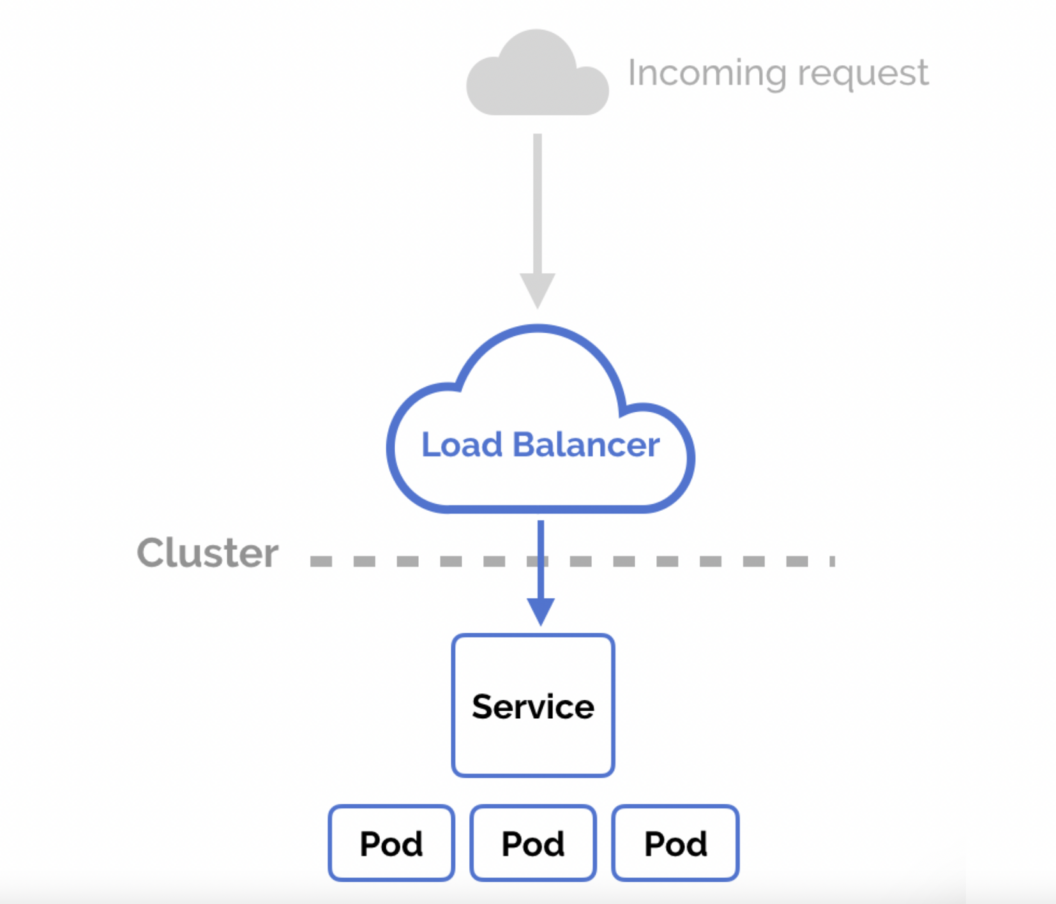

Kubernetes provides built-in load balancing through the Service resource. A Service gives a set of pods a stable IP address and DNS name and routes inbound traffic to those pods. Traffic is spread across the matching pods, and options such as session affinity based on client IP are available. The exact balancing algorithm (for example, round-robin) depends on how the cluster’s proxy is configured. Services can also integrate with external load balancers to offer more sophisticated load-balancing functionality.

Docker Swarm also provides built-in support for load balancing. Swarm mode uses an ingress routing mesh to route inbound traffic to the correct service within the swarm, whichever node receives the request. By default, requests to a service are distributed across its replicas through a virtual IP, and DNS round-robin is available as an alternative. To offer more sophisticated load-balancing features, Docker Swarm can also integrate with external load balancers.

In short, load balancing is built into both Kubernetes and Docker Swarm, although the mechanisms they use to distribute incoming traffic differ. Compared with Docker Swarm, Kubernetes has more sophisticated load-balancing features and can integrate with a wider range of external load balancers.
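To make the two ideas mentioned in this section concrete, here is a small sketch of round-robin distribution and of client-IP session affinity. It illustrates the concepts only and is not how either platform implements them. The function names and the hash-based affinity scheme are assumptions chosen for the example.

```python
import hashlib
from itertools import cycle
from typing import Iterator, List


def round_robin(backends: List[str]) -> Iterator[str]:
    """Hand out backends in a fixed rotation, one per request."""
    if not backends:
        raise ValueError("at least one backend is required")
    return cycle(backends)


def pick_by_client_ip(backends: List[str], client_ip: str) -> str:
    """Map a client IP to one backend so repeat requests reach the same replica."""
    if not backends:
        raise ValueError("at least one backend is required")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    replicas = ["replica-a", "replica-b", "replica-c"]
    rotation = round_robin(replicas)
    print([next(rotation) for _ in range(4)])
    print(pick_by_client_ip(replicas, "10.0.0.7"))
```

Round-robin spreads requests evenly but ignores who is asking, while affinity keeps a client on one replica at the cost of less even distribution. With this simple modulo scheme, adding or removing a replica can remap clients to a different backend.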

Scalability

Kubernetes is designed to scale applications horizontally: new pods can be added to a deployment or replica set to adapt to an increase in workload or traffic. To dynamically scale applications up or down in response to demand, Kubernetes offers a number of tools, including the Horizontal Pod Autoscaler (HPA), Cluster Autoscaler, and Vertical Pod Autoscaler (VPA). Kubernetes is appropriate for large-scale deployments since it can scale across many nodes.

Likewise, Docker Swarm is designed to scale applications horizontally, so it can add more replicas to a service to handle increased workloads or traffic. The docker service scale command lets users scale services up or down, although this is a manual step rather than an automatic response to demand. Docker Swarm can also scale across many machines.

Overall, both Kubernetes and Docker Swarm provide horizontal scaling capabilities and can scale across multiple nodes. However, Kubernetes offers more advanced scaling tools, including HPA, Cluster Autoscaler, and VPA, making it more suitable for complex and demanding workloads.
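The core of the Horizontal Pod Autoscaler is a simple ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below follows that documented formula. The function name, the parameter names, and the default bounds are illustrative assumptions, and the 10% tolerance is assumed to match the Kubernetes default.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA ratio formula, clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        wanted = current_replicas  # close enough to target: no change
    else:
        wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target
    print(desired_replicas(4, 90, 60))
```

With 4 replicas averaging 90% CPU against a 60% target, the ratio is 1.5 and the sketch returns 6. Docker Swarm has no equivalent built in, so the same decision would have to come from an operator or an external tool.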

Networking

Kubernetes uses a flat network model in which pods can communicate with one another and with services across the cluster, which makes for a strong and flexible networking approach. ClusterIP, NodePort, and LoadBalancer are among the Service types that Kubernetes provides to expose services and route traffic to pods. To control traffic within the cluster and to and from other networks, Kubernetes additionally offers network policies. See the official Kubernetes networking documentation for details.

Calico, Flannel, and Cilium are just a few of the network plugins that Kubernetes supports. Depending on the plugin, they add networking features such as enhanced security policies, multi-cluster connectivity, and service mesh functionality.

Docker Swarm offers a flexible and scalable networking approach that makes use of an overlay network to let containers communicate with one another across various swarm nodes. To route communication between services and containers, Docker Swarm provides a variety of networking options, such as ingress and overlay networks.

With the help of a DNS-based load-balancing mechanism, Docker Swarm provides built-in support for service discovery and load balancing. This method divides incoming traffic among service replicas.

Overall, robust networking capabilities and a variety of options for network design and control are offered by both Kubernetes and Docker Swarm. However, Kubernetes is better suited for complicated and demanding workloads since it provides a wider range of networking options and enables more sophisticated network plugins.

High availability

Kubernetes achieves high availability by running its control plane components, namely the API server, controller manager, and scheduler, on multiple master nodes. These components are kept highly available through techniques such as replication and clustering.

Kubernetes stores cluster state data in etcd, a distributed key-value store. By replicating data across several cluster nodes, etcd offers high availability: the cluster state stays accessible as long as a majority of the etcd members remain healthy.

Docker Swarm offers high availability through its swarm mode design, which consists of manager and worker nodes. Manager nodes are kept highly available through replication and clustering. They store cluster state in a built-in distributed store that is replicated across the managers, so the state stays available as long as a majority of managers remain healthy.

Performance

Kubernetes has been tested to scale up to thousands of nodes in a cluster and is known for its capacity to manage large-scale deployments. It supports rolling updates and deployment patterns such as canary releases, enabling updates with little or no downtime and efficient resource utilization. Kubernetes also features a strong network architecture with integrated load balancing and support for networking plugins like Calico and Flannel.

Docker Swarm is recognized for being straightforward and user-friendly. It is an excellent option for smaller deployments because it has a smaller footprint than Kubernetes. Load balancing and service discovery capabilities are also included in Docker Swarm, making it simple to deploy and operate containerized applications.

Both Kubernetes and Docker Swarm have performed well in performance benchmarks. The precise measurements, however, depend on the use case and the workload being tested.

Deployment

In Kubernetes, applications are deployed as a collection of containers controlled by a Deployment, which is a higher-level object. The Deployment object specifies the application’s desired state, including the number of replicas, the container image to use, and other configuration options. Kubernetes automatically manages the Deployment so that the desired state is maintained even in the face of failures or changes in demand. See the official Kubernetes documentation for details.
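As an illustration of what such a desired state looks like, the sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON. The field names follow the `apps/v1` Deployment API. The helper name `deployment_manifest` and the example values (`web`, `nginx:1.25`, port 80) are placeholders chosen for this example.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}  # the selector must match the pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # how many pod copies to keep running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3, 80), indent=2))
```

Saved to a file, the JSON output can be submitted with `kubectl apply -f`, since kubectl accepts JSON as well as YAML. Changing `replicas` and applying the file again is how the desired state is updated.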

In Docker Swarm, applications are deployed as a collection of services. Each service defines the state the application should be in, as well as how it should be replicated and load balanced across the swarm. Similar to Kubernetes, Docker Swarm offers automatic failover and self-healing features to keep the application responsive and available. See the official Docker documentation for details.

DNS-based service discovery

DNS-based service discovery allows services to be accessed by their name rather than their IP address, which makes it easier to manage and scale applications in a dynamic environment.

Kubernetes offers DNS-based service discovery through its cluster DNS service, which is CoreDNS in most current clusters. Each service in the cluster automatically receives a DNS entry, and other services or applications can resolve these entries through the cluster DNS service.

Docker Swarm offers DNS-based service discovery through the DNS server embedded in the Docker engine. Each service in the swarm receives a DNS entry, and other services on the same network can resolve these entries using the embedded DNS server. See the official Docker documentation for more.

Using their respective DNS-based systems, Kubernetes and Docker Swarm both enable load balancing and automatic service discovery. Kubernetes, however, has more sophisticated features like DNS policies that let administrators alter the way DNS resolution occurs. Docker Swarm implements DNS-based service discovery more simply, which can make it easier to handle in smaller or less complex setups.
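The naming conventions behind both systems are easy to show in code. In Kubernetes, a service is reachable at `<service>.<namespace>.svc.<cluster domain>`, where `cluster.local` is the usual default domain. In Docker Swarm, containers on a shared overlay network can resolve a service by its name, and `tasks.<service>` returns the addresses of the individual tasks. The helper names below are hypothetical, and the sketch only builds the names rather than performing any lookup.

```python
from typing import Dict


def kubernetes_service_fqdn(service: str, namespace: str = "default",
                            cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Kubernetes service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def swarm_service_names(service: str) -> Dict[str, str]:
    """DNS names a Swarm service answers to on a shared overlay network."""
    return {
        "virtual_ip": service,           # resolves to the service's virtual IP
        "task_ips": f"tasks.{service}",  # resolves to each task's own IP
    }


if __name__ == "__main__":
    print(kubernetes_service_fqdn("web", namespace="shop"))
    print(swarm_service_names("web"))
```

Inside a cluster, an application would pass these names to an ordinary DNS lookup or HTTP client. The shorter form `web` also works within the same Kubernetes namespace.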

Graphical User Interface (GUI)

Kubernetes offers an optional web-based dashboard as its graphical user interface (GUI), which gives a visual depiction of the cluster’s state and lets users control and monitor their applications. Plugins and extensions can also be used to personalize the interface. Docker Swarm, by contrast, lacks a native GUI, but third-party tools like Portainer and Docker Universal Control Plane can be used to manage and monitor Docker Swarm clusters. The decision between Kubernetes and Docker Swarm for GUI ultimately comes down to personal preference and the level of control and visualization needed for the user’s containerized applications.

Docker Swarm vs Kubernetes: which works better?

The choice between Docker Swarm and Kubernetes ultimately depends on the specific needs of the user’s containerized application environment. Both platforms have their strengths and weaknesses.

Docker Swarm is a suitable option for small to medium-sized deployments because it’s easier to set up and administer. In addition, it is easier to understand and uses fewer resources than Kubernetes to operate.

Kubernetes, on the other hand, is more sophisticated, has a steeper learning curve, and offers a greater degree of flexibility and scalability, making it better suited for larger and more complicated installations. Kubernetes has a larger and more active community than competing technologies, which leads to a greater variety of available resources and third-party integrations.

Conclusion

No matter which platform you use, it is crucial to thoroughly weigh your alternatives and pick the one that best suits your needs. The smooth and secure operation of your deployment will also require regular maintenance and updates. For better insight, see the official documentation.

References: